Deterministic, explainable AI for precision SecOps

How multi-model AI makes LLMs ready for production

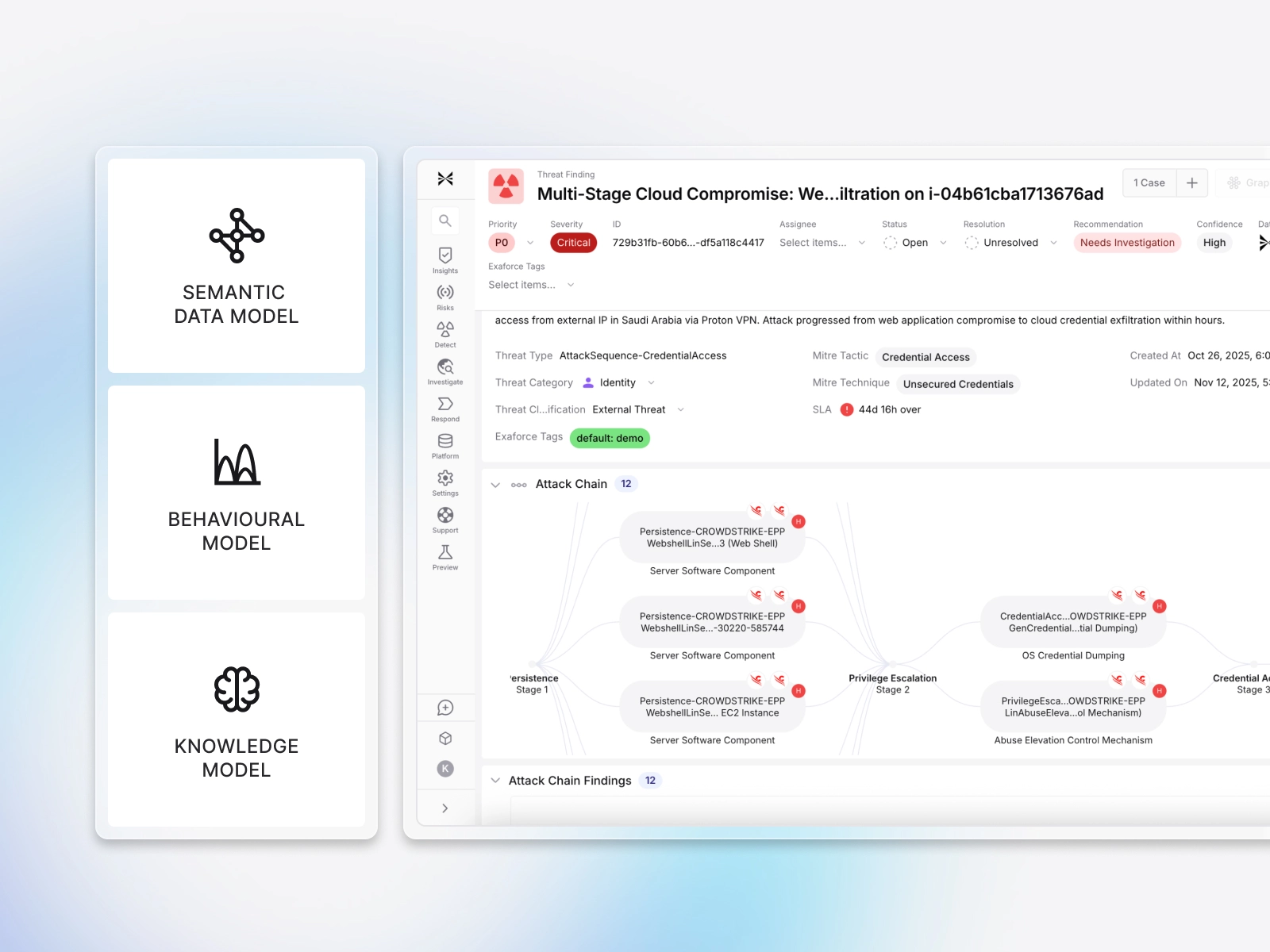

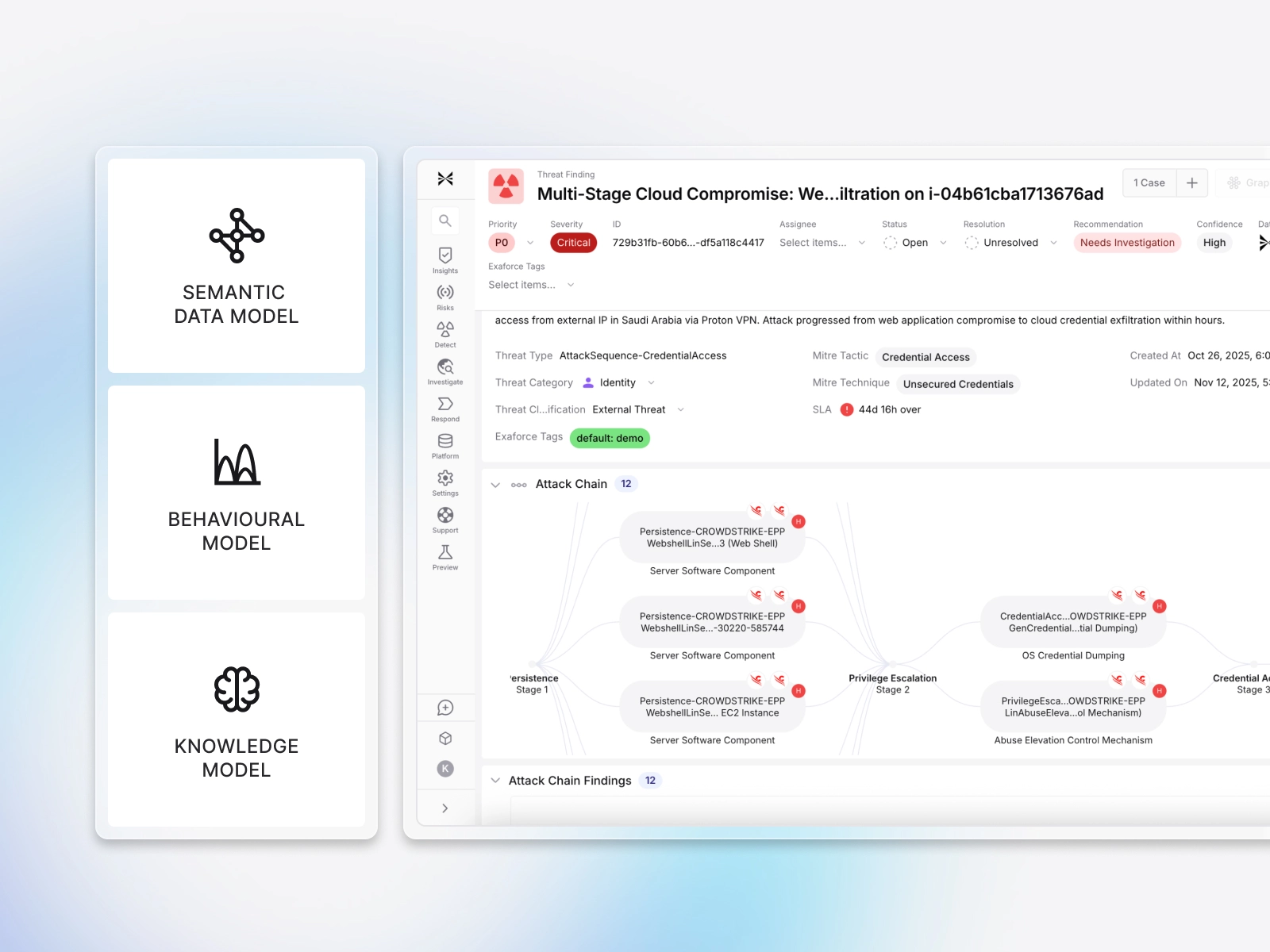

Each model brings specialized expertise: the Semantic Model resolves entities and relationships, the Behavioral Model defines normal activity, and the Knowledge Model converts findings into actionable intelligence. Together, they deliver repeatable, high-fidelity outcomes without the guesswork or cost of LLM-only approaches.

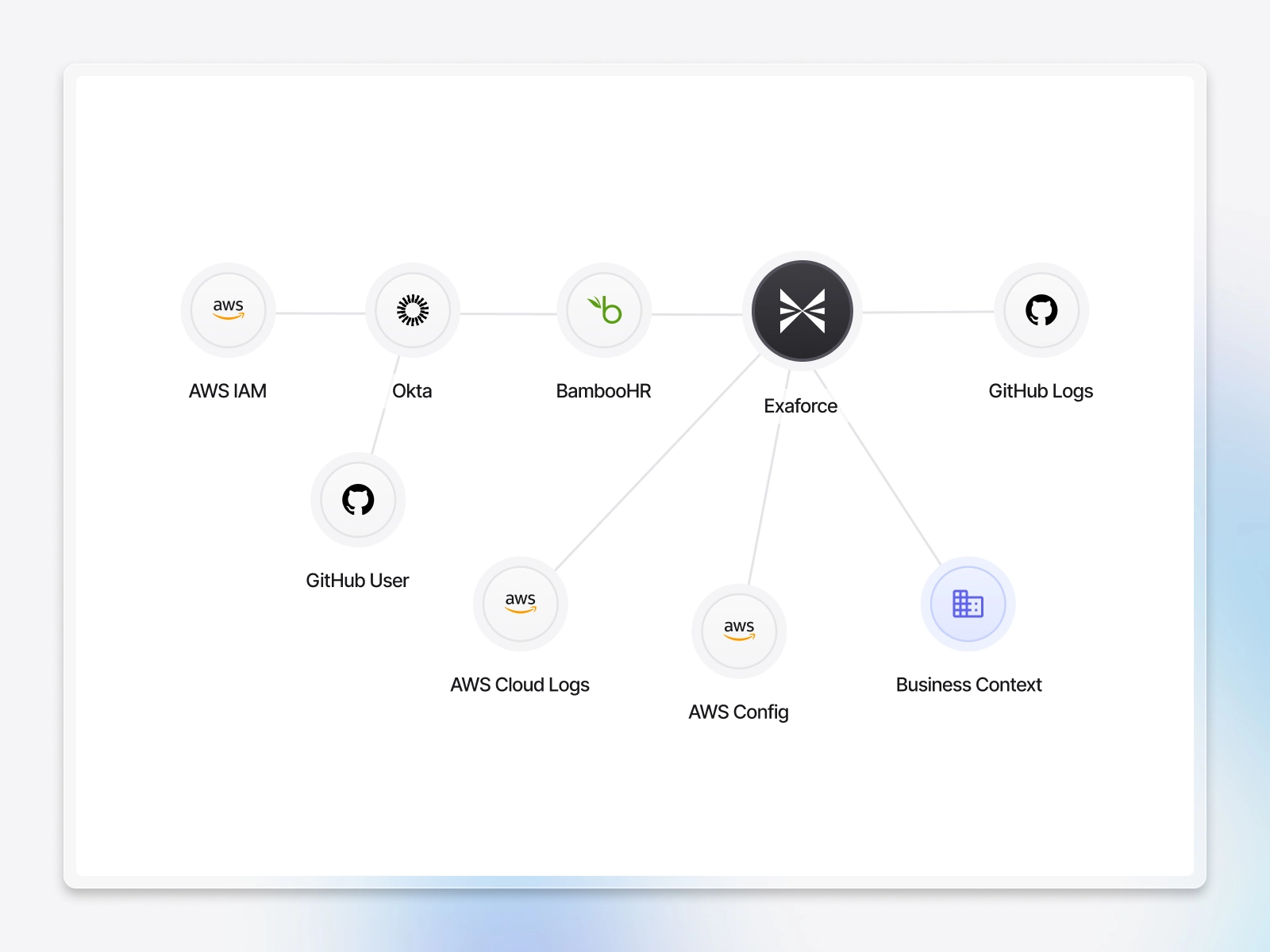

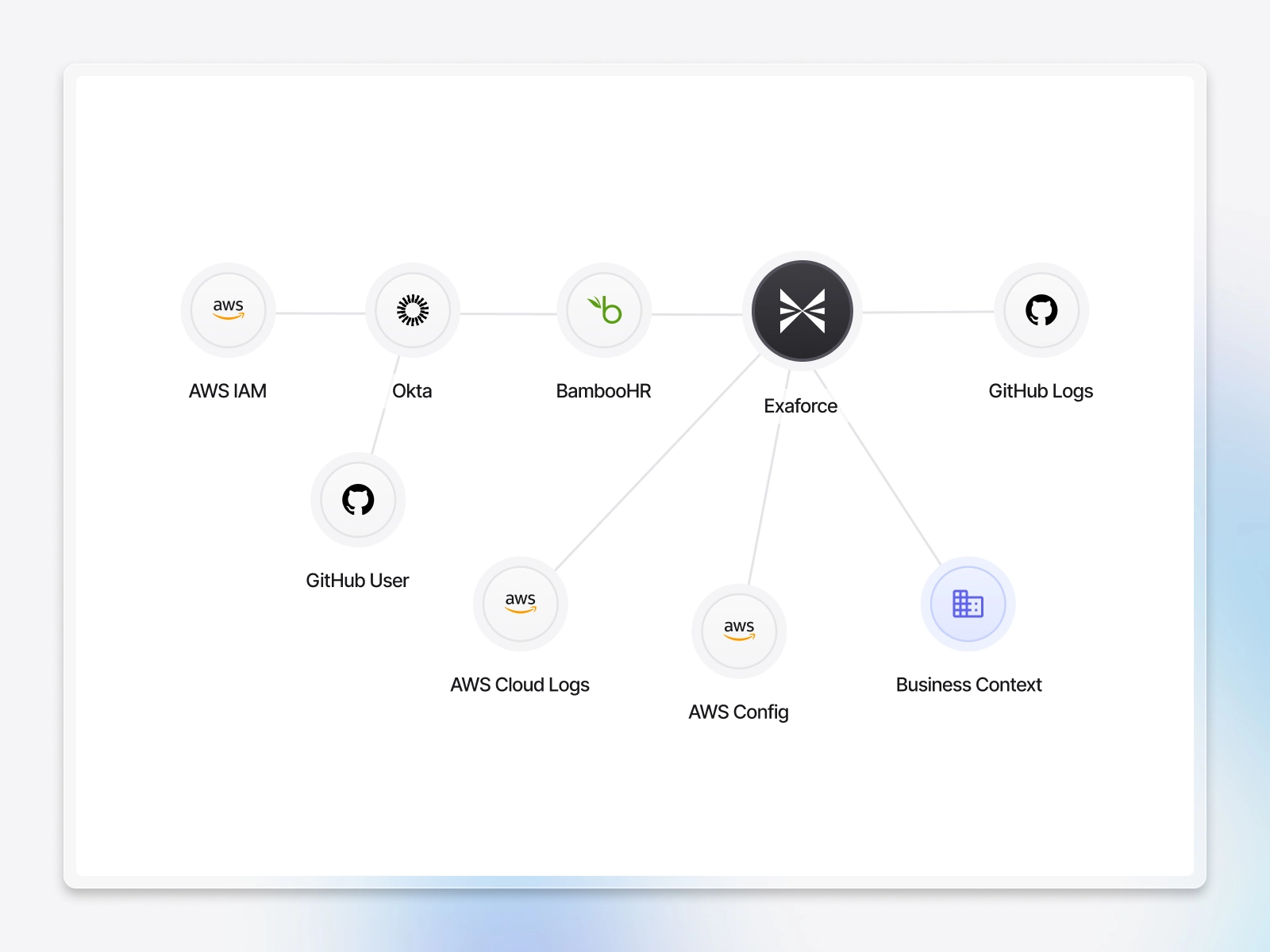

Structured context available when needed

The Semantic Model builds and maintains relationships between identities, resources, and actions, creating a living map of your environment for fast, precise reasoning. Because it stores context in a structured form, the system can interpret intent, understand dependencies, and react with clarity instead of guesswork. You gain faster decisions, higher-fidelity outputs, and a foundation that strengthens as complexity grows.
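To make the idea of a "living map" concrete, here is a minimal sketch of a labeled entity-relationship graph. It is not Exaforce's implementation: the `EntityGraph` class, its `relate` and `reachable` methods, and the `user:`/`role:`/`bucket:` naming scheme are all hypothetical. It shows how structured relationships let a question like "what can this identity reach, and through which path?" be answered deterministically by graph traversal rather than by guesswork.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Edge = Tuple[str, str, str]  # (source entity, relation, target entity)


@dataclass
class EntityGraph:
    """Hypothetical, minimal map of identities, resources, and the relations between them."""
    # Adjacency list: source entity -> list of (relation, target entity)
    edges: Dict[str, List[Tuple[str, str]]] = field(default_factory=lambda: defaultdict(list))

    def relate(self, source: str, relation: str, target: str) -> None:
        """Record a directed, labeled relationship, e.g. user:alice -assumes-> role:admin."""
        self.edges[source].append((relation, target))

    def reachable(self, start: str) -> Dict[str, List[Edge]]:
        """Breadth-first walk: each reachable entity mapped to the relation path that reaches it."""
        paths: Dict[str, List[Edge]] = {start: []}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for relation, target in self.edges.get(node, []):
                if target not in paths:  # first visit in BFS order gives a shortest path
                    paths[target] = paths[node] + [(node, relation, target)]
                    queue.append(target)
        return paths


graph = EntityGraph()
graph.relate("user:alice", "assumes", "role:admin")
graph.relate("role:admin", "can_write", "bucket:payroll")
graph.relate("user:bob", "member_of", "group:eng")

paths = graph.reachable("user:alice")
print(sorted(paths))             # ['bucket:payroll', 'role:admin', 'user:alice']
print(paths["bucket:payroll"])   # the two-hop path explaining *why* alice can reach it
```

Returning the path alongside each reachable entity is a deliberate choice in this sketch: the answer carries its own explanation, which is what makes the result auditable.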

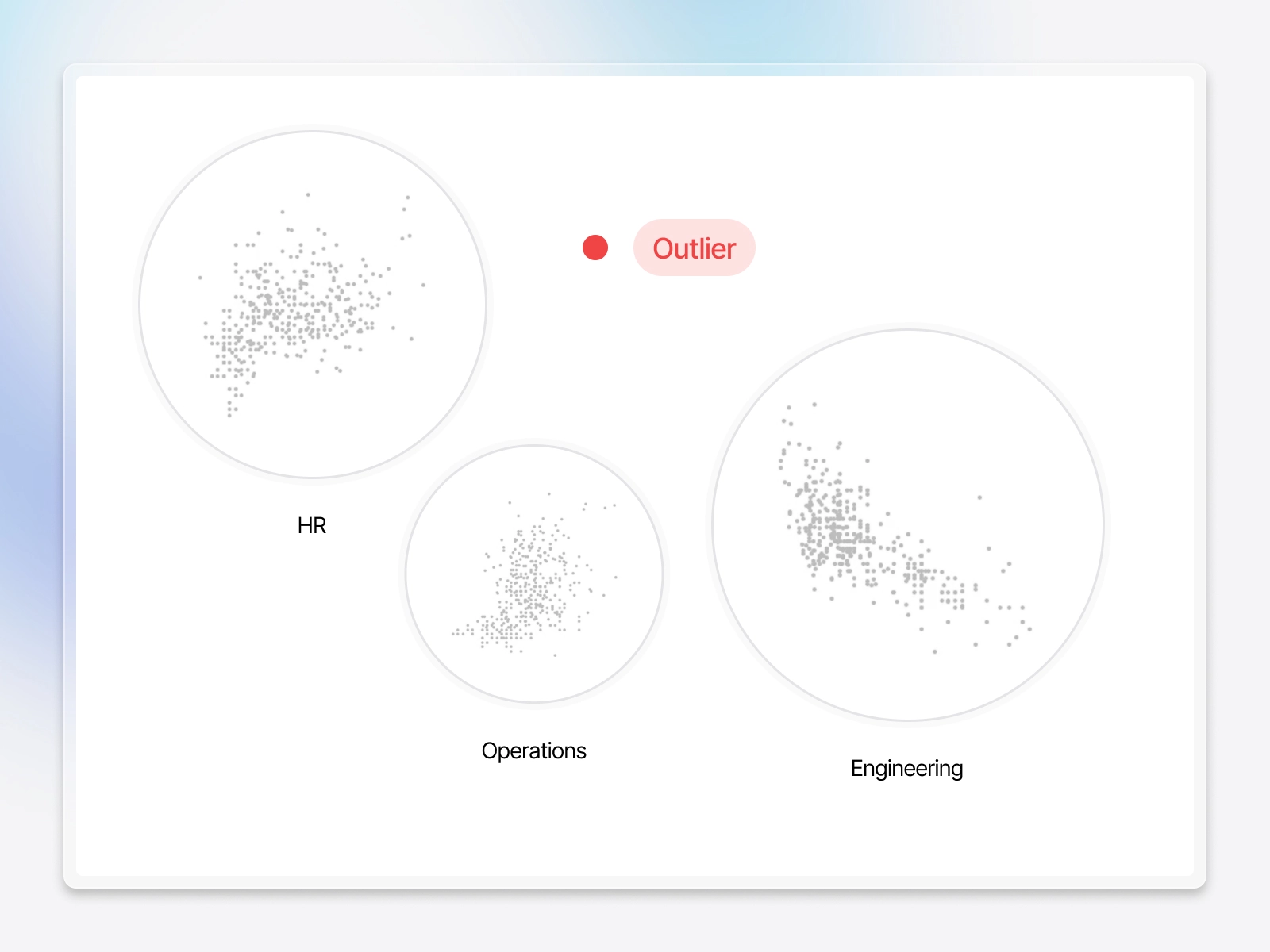

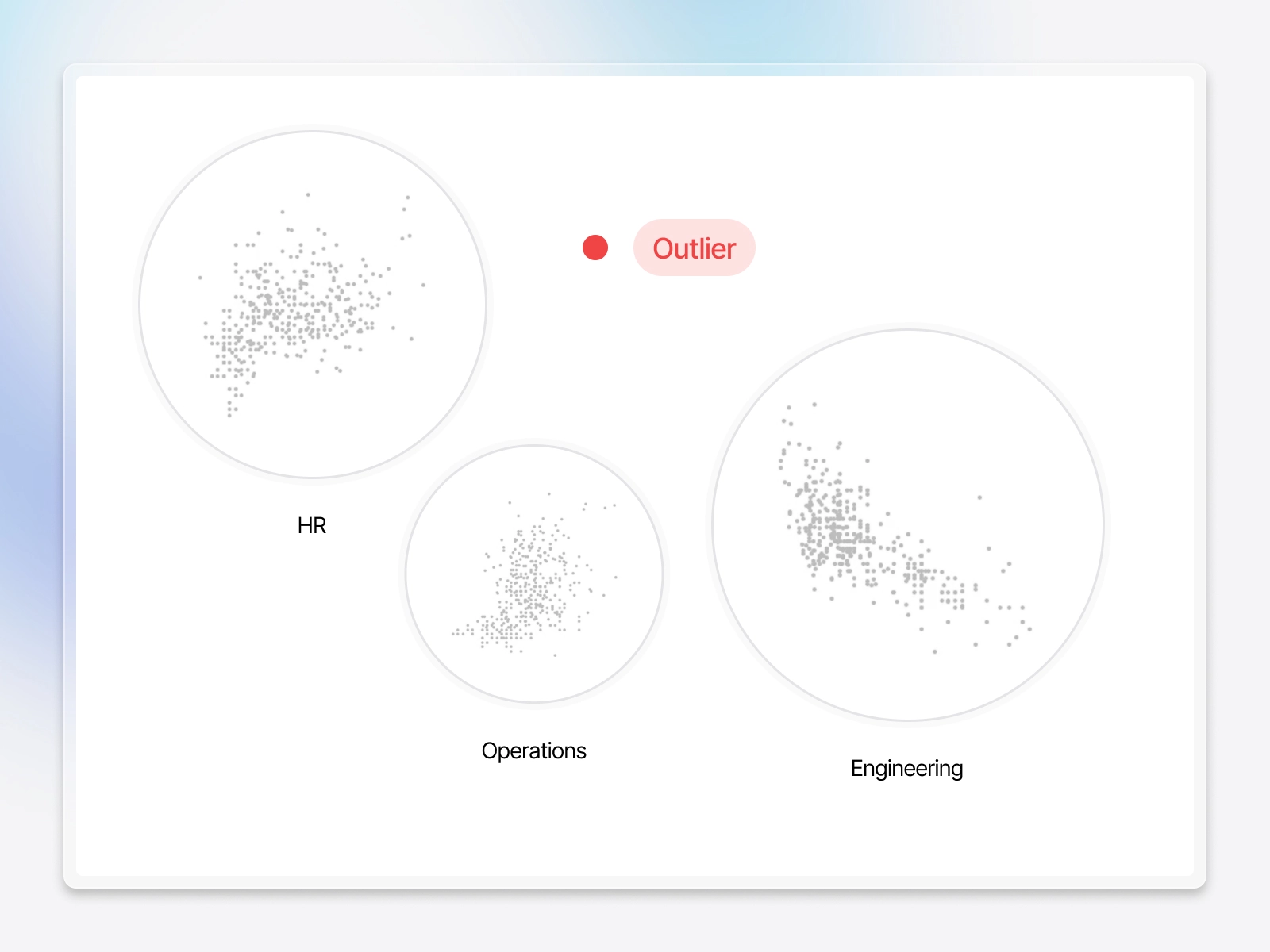

See behavior shifts before they become a real threat

The Behavioral Model continuously establishes what “normal” looks like across every entity, including users, identities, resources, and devices, and quantifies deviations using explainable anomaly scores. Rather than relying on single-dimensional baselines that generate false positives, it evaluates multi-dimensional co-occurrence across factors such as location, time of day, entity attributes, and associated risk to produce comprehensive and accurate anomaly scoring.
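The contrast between single-dimensional baselines and multi-dimensional co-occurrence can be illustrated with a small sketch. This is an assumption-laden example, not the Behavioral Model itself: the `CooccurrenceBaseline` class, the two dimensions (location and time of day), and the choice of Laplace-smoothed surprisal in bits as the score are all hypothetical. The per-dimension scores double as a simple explanation of the joint score.

```python
import math
from collections import Counter
from typing import Dict, Sequence, Tuple

# One value per dimension, e.g. (location, time_of_day). Dimensions are illustrative.
Event = Tuple[str, ...]


class CooccurrenceBaseline:
    """Hypothetical per-entity baseline that scores attribute values jointly."""

    def __init__(self, dimensions: Sequence[str], alpha: float = 1.0) -> None:
        self.dimensions = tuple(dimensions)
        self.alpha = alpha  # Laplace smoothing: unseen values get a finite, high score
        self.joint: Counter = Counter()  # counts of full attribute combinations
        self.marginals = [Counter() for _ in self.dimensions]  # counts per single dimension
        self.total = 0

    def observe(self, event: Event) -> None:
        """Fold one historical event into the entity's baseline."""
        self.joint[event] += 1
        for counter, value in zip(self.marginals, event):
            counter[value] += 1
        self.total += 1

    def _surprisal(self, count: int, outcomes: int) -> float:
        # -log2 of the smoothed probability, in bits: higher means rarer for this entity
        p = (count + self.alpha) / (self.total + self.alpha * outcomes)
        return -math.log2(p)

    def score(self, event: Event) -> Dict[str, float]:
        """Joint anomaly score plus per-dimension scores that explain it."""
        # Joint outcome space: every combination of seen values, plus one "unseen" slot each.
        joint_outcomes = math.prod(len(c) + 1 for c in self.marginals)
        scores = {"joint": self._surprisal(self.joint[event], joint_outcomes)}
        for name, counter, value in zip(self.dimensions, self.marginals, event):
            scores[name] = self._surprisal(counter[value], len(counter) + 1)
        return scores


baseline = CooccurrenceBaseline(["location", "time_of_day"])
for _ in range(40):
    baseline.observe(("NYC", "day"))
for _ in range(10):
    baseline.observe(("Berlin", "night"))

habitual = baseline.score(("Berlin", "night"))  # rarer values, but a familiar pairing
novel = baseline.score(("NYC", "night"))        # familiar values, never seen together
print({k: round(v, 2) for k, v in habitual.items()})
print({k: round(v, 2) for k, v in novel.items()})
```

In this toy history, adding up the per-dimension scores would rank the habitual Berlin-at-night pattern as more unusual (a likely false positive), while the joint score correctly ranks the never-before-seen NYC-at-night combination higher.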

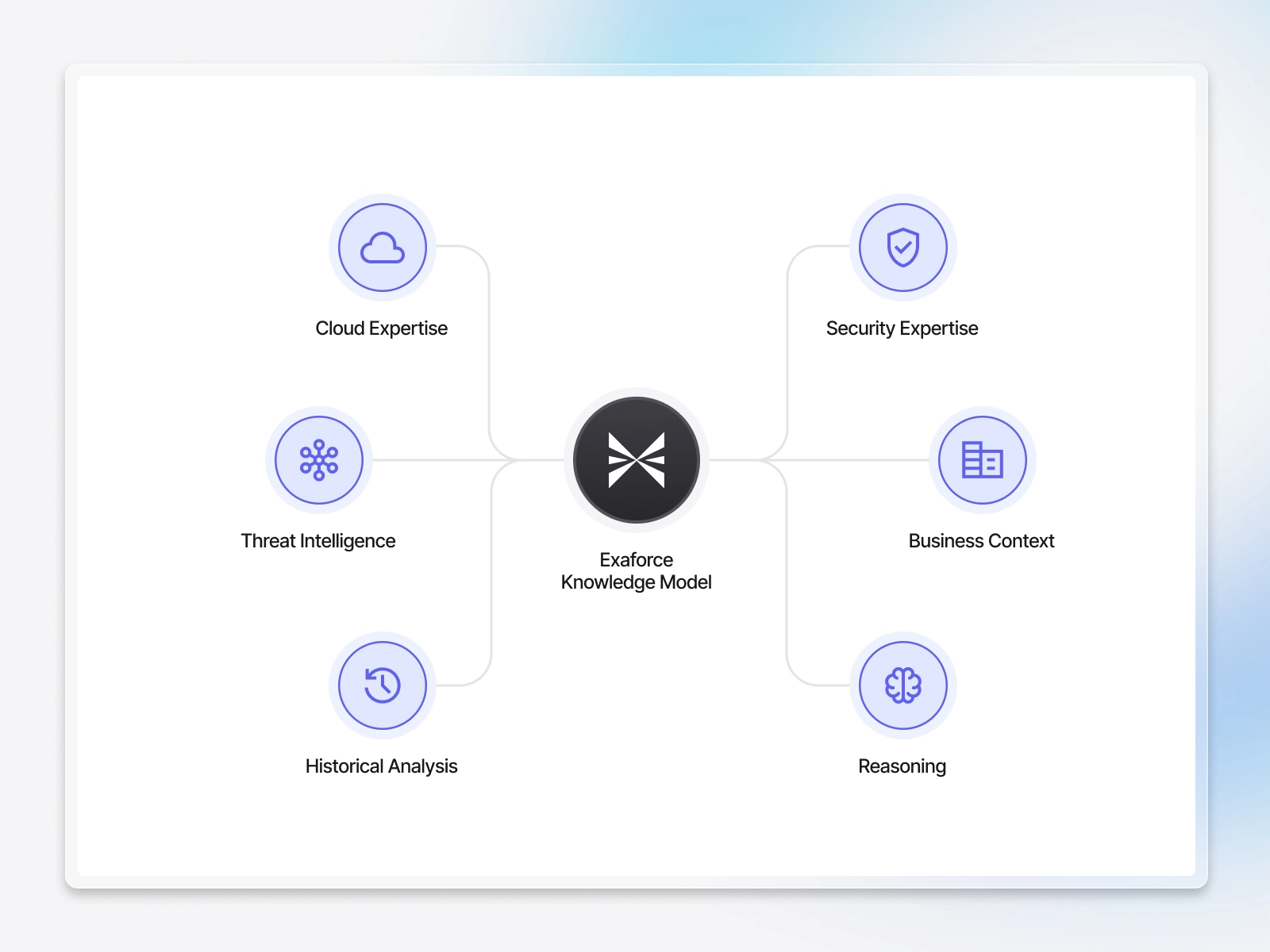

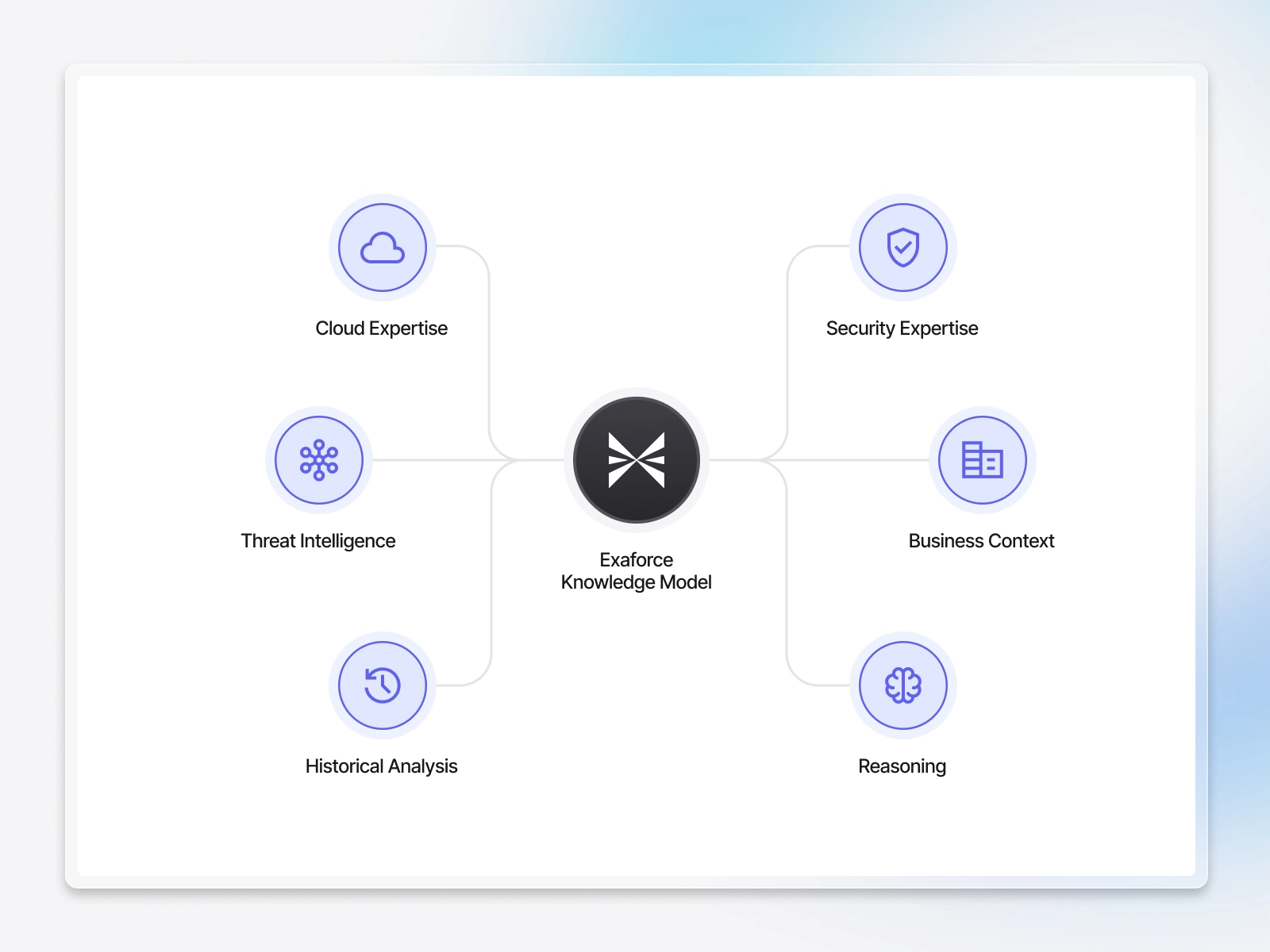

LLM reasoning backed by facts, business context, and historical outcomes

The Knowledge Model blends deep technical expertise, curated external intelligence, and intimate awareness of your environment. It draws on LLM reasoning, historical decisions, and hard-coded business rules to understand how your applications, infrastructure, and policies actually operate day to day. High-level intents are broken down into precise checks across the Semantic and Behavioral layers, then reported back as clear summaries with supporting evidence, so analysts and auditors see not only the answer but also the reasoning behind it.
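The flow from a high-level intent to precise checks to an evidence-backed summary can be sketched as follows. Everything here is hypothetical and simplified: the `PLAYBOOK` mapping, the two check functions, the `"account_takeover"` intent name, the 4.0 anomaly threshold, and the rule that escalation requires every check to agree are illustrative assumptions, and the context dictionary stands in for facts the Semantic and Behavioral layers would supply.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical context; in a real system these facts would come from the
# Semantic (relationships) and Behavioral (anomaly scores) layers.
Context = Dict[str, Any]


@dataclass
class Finding:
    check: str        # which precise check produced this finding
    layer: str        # "semantic" or "behavioral"
    suspicious: bool
    evidence: str     # human-readable reason an analyst or auditor can verify


def privilege_path_check(ctx: Context) -> Finding:
    path = ctx["privilege_path"]  # relation path from the identity to an admin role, if any
    evidence = " -> ".join(path) if path else "no path to an admin role"
    return Finding("privilege_path", "semantic", bool(path), evidence)


def login_anomaly_check(ctx: Context, threshold: float = 4.0) -> Finding:
    score = ctx["anomaly_score"]
    return Finding("login_anomaly", "behavioral", score >= threshold,
                   f"anomaly score {score:.1f} vs threshold {threshold:.1f}")


# Hypothetical playbook: a high-level intent expands into a fixed list of precise checks.
PLAYBOOK: Dict[str, List[Callable[[Context], Finding]]] = {
    "account_takeover": [privilege_path_check, login_anomaly_check],
}


def investigate(intent: str, ctx: Context) -> str:
    """Run every check for the intent and return a summary with per-check evidence."""
    findings = [check(ctx) for check in PLAYBOOK[intent]]
    # Conservative rule for this sketch: escalate only when every check is suspicious.
    verdict = "escalate" if all(f.suspicious for f in findings) else "likely benign"
    lines = [f"{intent}: {verdict}"]
    for f in findings:
        status = "suspicious" if f.suspicious else "ok"
        lines.append(f"  [{f.layer}] {f.check}: {status} ({f.evidence})")
    return "\n".join(lines)


report = investigate("account_takeover",
                     {"privilege_path": ["user:alice", "role:admin"], "anomaly_score": 5.9})
print(report)
```

Because the checks are fixed per intent, the same inputs always yield the same verdict, and each line of the summary names the layer and the evidence behind it.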

Deterministic and governable outcomes you can defend

Multi-model AI replaces ad hoc prompting with governed reasoning for consistent, auditable results. The Semantic, Behavioral, and Knowledge Models work within your guardrails, with explicit data scope, curated context, and full logging. You get focused, human-like interaction tailored to your business, not a general-purpose chatbot.
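Two of those guardrails, an explicit data scope and full logging, can be illustrated with a short sketch. The `GovernedContext` class, the `ScopeError` exception, and the source names `cloudtrail` and `hr_records` are hypothetical; the point is only that every data request is checked against an allow-list and recorded, including the ones that are denied.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet, List


class ScopeError(PermissionError):
    """Raised when a request falls outside the explicitly approved data scope."""


@dataclass
class GovernedContext:
    """Hypothetical guardrail: an explicit data scope plus an append-only audit trail."""
    allowed_sources: FrozenSet[str]
    data: Dict[str, Any]
    audit: List[Dict[str, str]] = field(default_factory=list)

    def fetch(self, source: str, requester: str) -> Any:
        decision = "allow" if source in self.allowed_sources else "deny"
        # Every request is recorded, including denials, so auditors can replay it later.
        self.audit.append({"requester": requester, "source": source, "decision": decision})
        if decision == "deny":
            raise ScopeError(f"{source!r} is outside the approved data scope")
        return self.data[source]


ctx = GovernedContext(
    allowed_sources=frozenset({"cloudtrail"}),
    data={"cloudtrail": ["ConsoleLogin user:alice"], "hr_records": ["salary data"]},
)
events = ctx.fetch("cloudtrail", requester="knowledge_model")
try:
    ctx.fetch("hr_records", requester="knowledge_model")
except ScopeError as err:
    print("blocked:", err)

for entry in ctx.audit:
    print(entry)
```

Logging the decision before raising keeps the audit trail complete even when a request is refused.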

We believe Exaforce’s multi-model AI approach is unique in the industry and will dramatically reduce the false positives and investigation times we experience in our cloud and SaaS environments. The platform augments our SOC teams by delivering streamlined security operations and faster incident response for every client, freeing up more time to focus on proactive threat hunting.

