On a Sunday night, a senior engineer pushes what looks like a routine update to your authentication service. The change set is buried in a larger refactor, and the unit and integration tests all pass. Hidden inside is a subtle code path that accepts a hard-coded bypass token and quietly logs valid credentials to an internal debug stream. Over the next week, the same engineer starts cloning dozens of repositories they have never touched before and syncing them to an unmanaged laptop, just days before announcing they are leaving for a competitor. By the time someone notices unusual access patterns in Git and a spike in anomalous authentication events, your crown-jewel services have already been tampered with, and your core IP has walked out the door. This is insider risk in its most painful form: code theft and code manipulation carried out with legitimate identities, familiar tools, and activity that looks normal until you see it in the right context.

Insider risk is the risk that people with legitimate access to your systems, data, and processes will, intentionally or unintentionally, cause harm. Insider threat is the clearly hostile subset of that risk: stealing intellectual property, sabotaging systems, or selling access. In real environments, you see the entire spectrum, including careless mistakes, compromised identities, and determined malicious insiders.

Structurally, this is different from external threat detection. External attacks normally start outside the trust boundary and work inward through vulnerabilities and misconfigurations. Insider incidents start from valid identities, approved devices, and sanctioned channels such as Git, Google Workspace, Microsoft 365, Slack, Salesforce, and cloud consoles. At the protocol level, much of the traffic looks exactly like business as usual.

Recent research on the cost of insider risks estimates average annual costs around $17 million per organization, with roughly three quarters of recorded insider incidents caused by non-malicious insiders, such as negligent or outsmarted users, rather than overtly malicious staff. Malicious insiders and compromised accounts still dominate the headlines, but the day-to-day cost curve is heavily influenced by ordinary people making risky decisions with powerful tools.

Three archetypes of insider risk

Most insider discussions converge on three archetypes that map well to what security teams see in production.

- Careless or overburdened user who makes mistakes or takes risky shortcuts.

- Malicious insider who deliberately abuses access for theft, sabotage, or extortion.

- Compromised identity where an external attacker controls a legitimate account.

Careless users and unintended exposure

The Toyota T-Connect incident is a textbook illustration of non-malicious insider risk. A subcontractor accidentally pushed part of the telematics service source code to a public GitHub repository, including a hard-coded access key to a customer data server. That key remained exposed for nearly five years and potentially affected almost 300,000 customers. There was no exploit chain and no new malware strain, just secrets in code and a public repository belonging to someone who thought they were doing normal work.

Security teams routinely see similar patterns, with engineers pushing internal tools to personal GitHub for convenience, analysts exporting sensitive reports to personal cloud storage, and staff copying data to unapproved locations to meet deadlines.

Malicious insiders and retaliation

At the other end of the spectrum are insiders who understand the environment and purposefully abuse that knowledge. Just recently, CrowdStrike confirmed that an insider had taken screenshots of internal systems and shared them, along with SSO authentication cookies, with the Scattered Lapsus$ Hunters group on Telegram, reportedly in exchange for payment. The company contained the incident quickly, but it is a clear reminder that even security vendors with sophisticated controls can be targeted from within.

The Houston waste management case shows the offboarding failure pattern very clearly. A former contractor who had been fired impersonated another contractor to regain access, then ran a PowerShell script that reset roughly 2,500 passwords. The action locked out workers across the US and generated documented losses of more than $860,000. This was a retaliatory abuse of entirely legitimate tools and privileges.

Compromised identities that behave like insiders

Compromised identities blur the line between insiders and external attackers. The Ponemon study explicitly tracks outsmarted insiders, where credentials are stolen and abused, as a major category of insider incidents. In practice, these accounts behave almost exactly like malicious insiders.

Once an attacker has a valid SSO session or a working VPN credential, they can clone Git repositories, exfiltrate Google Drive or SharePoint files, execute privileged actions in SaaS platforms, and pivot through neglected service accounts. From the perspective of a SIEM, this is a series of successful logins and permitted API calls. The only realistic way to catch the activity is to notice that the pattern of behavior is wrong for that identity in its current business context.

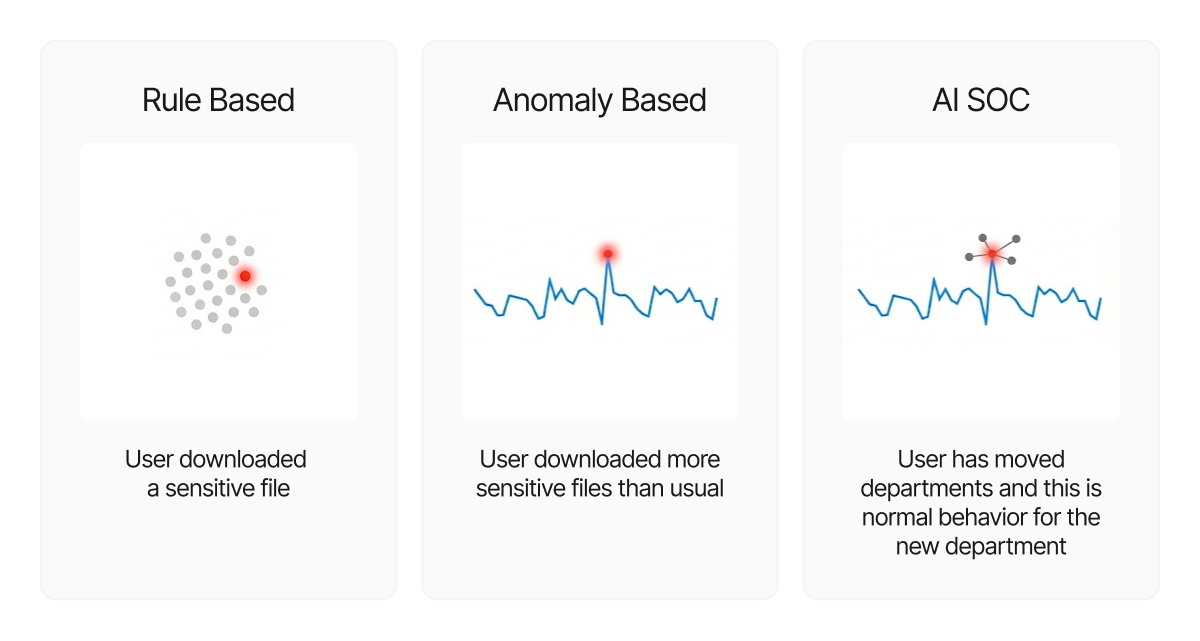

Why UEBAs and anomalies struggle with insiders

Most security architectures remain optimized for external threats, where keeping bad actors out is the primary goal. Insider threats are fundamentally harder because the difference between normal work, like running queries or downloading files, and malicious activity often lies in intent rather than in the action itself. This forces traditional tools such as SIEMs and UEBAs into a losing trade-off: flag every anomaly and drown analysts in noise, or reduce sensitivity to quiet the alerts and inevitably miss subtle, low-and-slow data theft.

AI SOC tools aim to resolve this dilemma by removing the need to compromise on sensitivity. Instead of filtering at the detection level, these platforms can capture every suspicious signal and rely on Large Language Models (LLMs) supplied with relevant, deep organizational and technology context. By analyzing the "why" behind the "what" at triage time, such as distinguishing a legitimate project-based spike from an unauthorized download, AI can aggressively filter false positives and surface only the narratives that represent real risk.

While UEBA and SIEM products can flag anomalies like odd login locations, they generally fail to answer the "so what?" question because the business or technology context is missing. Org charts, project assignments, and HR events typically live in separate systems, meaning security teams only see the full picture during a manual investigation rather than at the moment of detection.

How insider risk appears in telemetry

To move from abstract principles to real controls, it helps to examine specific behaviors and how they appear in logs. The following scenarios combine careless, malicious, and compromised insiders and map directly to detection patterns that a security platform should support.

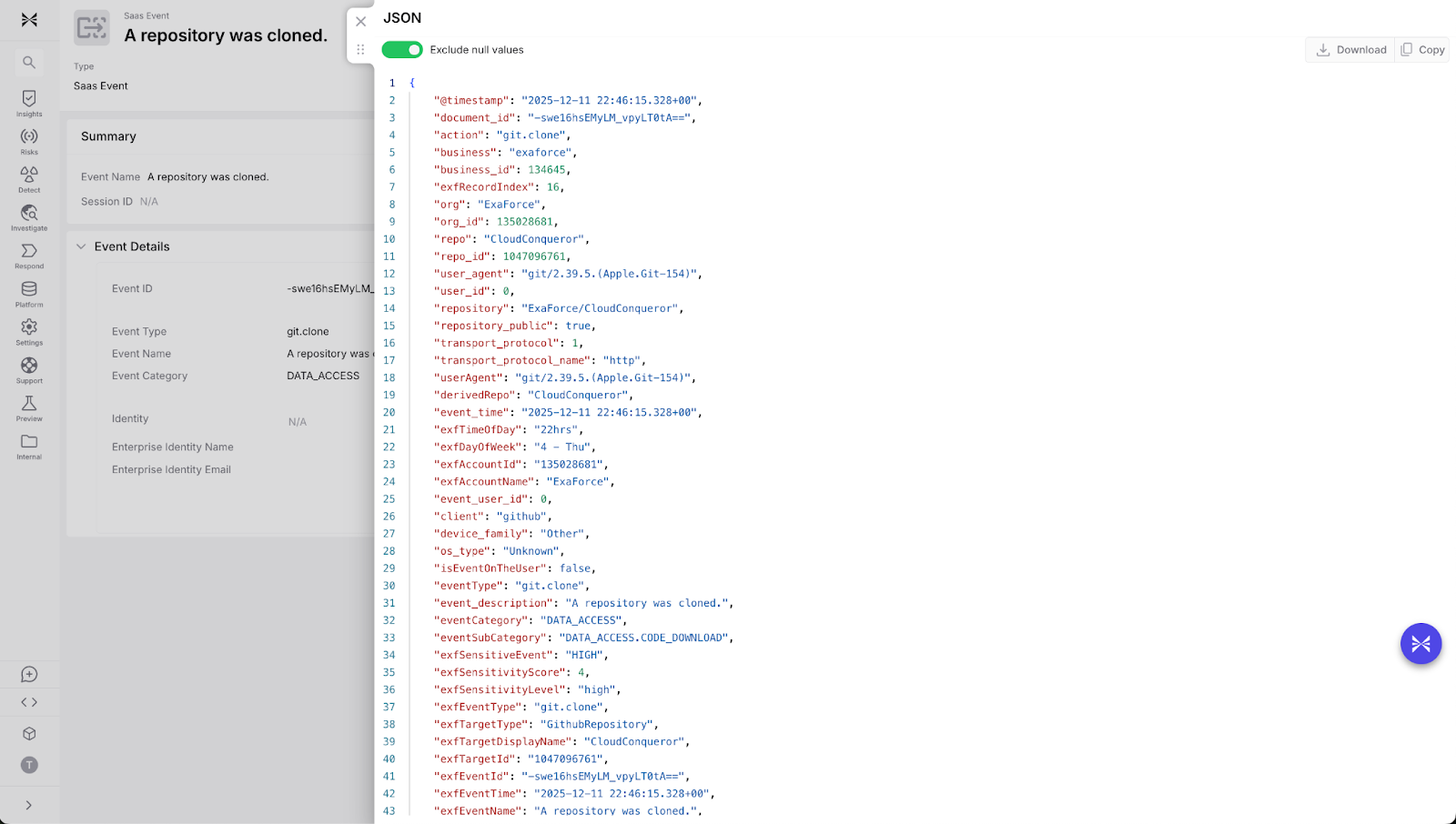

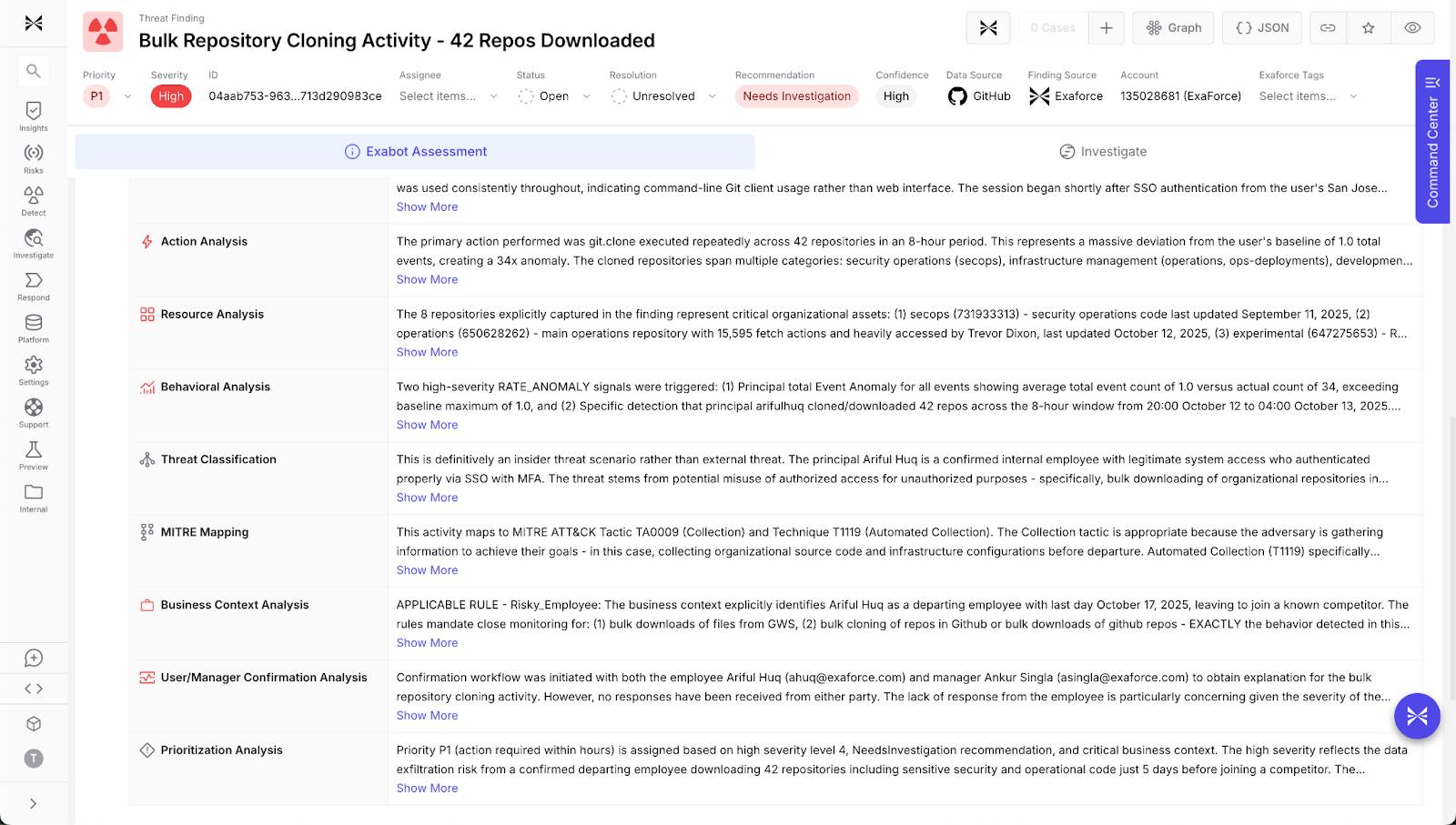

Unusual source code access and cloning

Consider an engineer who plans to leave and begins cloning many repositories that they have never touched before, including high sensitivity services. Or a compromised SSO session that is used to script bulk git clone operations from build runners.

In telemetry, you see a burst of Git access from a single identity, often to repositories outside the user’s historical working set. The protocol is healthy. The pattern is not.

Effective detection dynamically learns baseline access patterns for each user and peer group to identify sharp deviations, particularly against repositories tagged as sensitive or restricted. To distinguish real risk from noise, the system constructs a cohesive incident narrative that fuses technical telemetry from Git and SSO logs with critical business context.
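As a rough illustration of the "outside the historical working set" idea, the sketch below compares recent clone events against each user's past repositories. It is a minimal example under stated assumptions, not a description of any product's detection logic: the function name `novel_repo_clones`, the `(user, repo)` event shape, and the `min_novel` threshold are all hypothetical, and a real system would also use peer groups and business context.

```python
from collections import defaultdict

def novel_repo_clones(history, recent, sensitive_repos, min_novel=3):
    """Flag users whose recent clones reach repos outside their historical working set.

    history, recent: iterables of (user, repo) clone events (hypothetical shape).
    sensitive_repos: set of repo names tagged as sensitive or restricted.
    min_novel: hypothetical threshold on distinct never-before-seen repos.
    """
    working_set = defaultdict(set)
    for user, repo in history:
        working_set[user].add(repo)

    novel = defaultdict(set)
    for user, repo in recent:
        if repo not in working_set[user]:
            novel[user].add(repo)

    findings = []
    for user, repos in novel.items():
        if len(repos) >= min_novel:
            findings.append({
                "user": user,
                "novel_repos": sorted(repos),
                "sensitive_hits": sorted(repos & sensitive_repos),
            })
    return findings

# Illustrative data only.
history = [("alice", "web"), ("alice", "api"), ("bob", "infra")]
recent = [
    ("alice", "web"), ("alice", "auth"), ("alice", "billing"),
    ("alice", "payments"), ("bob", "infra"),
]
findings = novel_repo_clones(history, recent, sensitive_repos={"auth", "payments"})
```

Here only `alice` is flagged, because three of her recent clones are new to her and two of them touch sensitive repositories. Whether that is exploration or exfiltration still depends on the business context described above.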

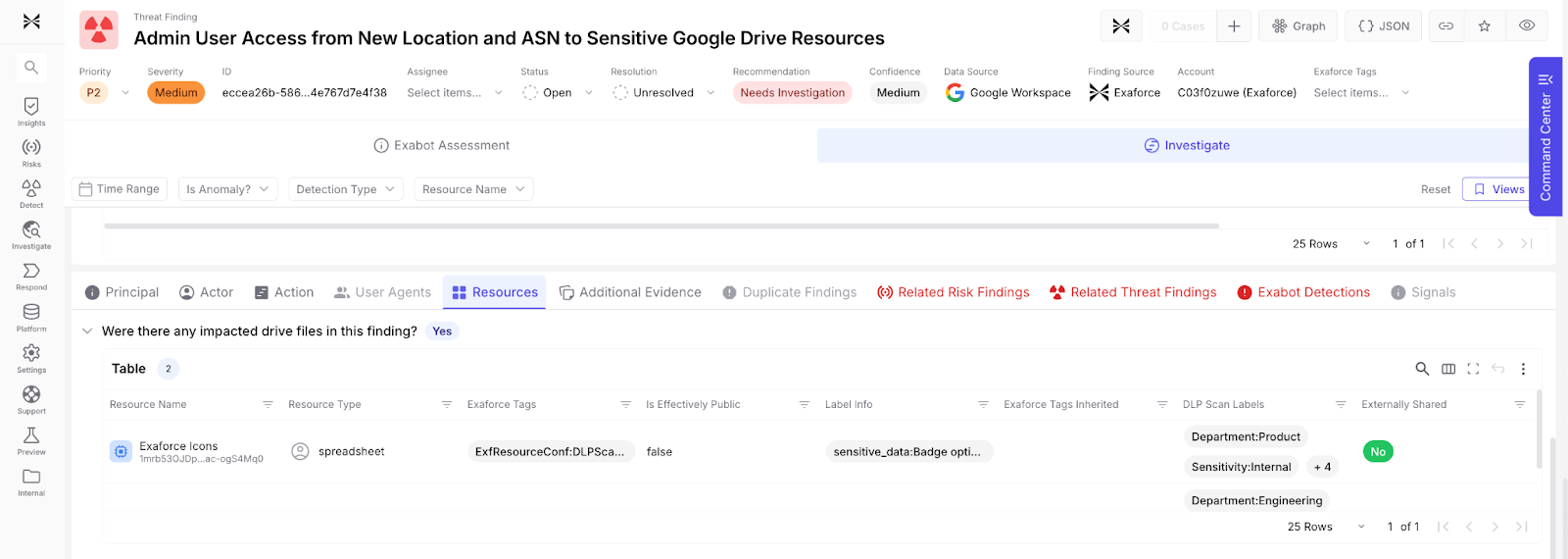

Mass download of documents and SaaS data

Sales leaders exporting entire opportunity histories, finance staff pulling years of ledger data, and compromised accounts bulk downloading Drive or SharePoint files are recurrent patterns in insider investigations.

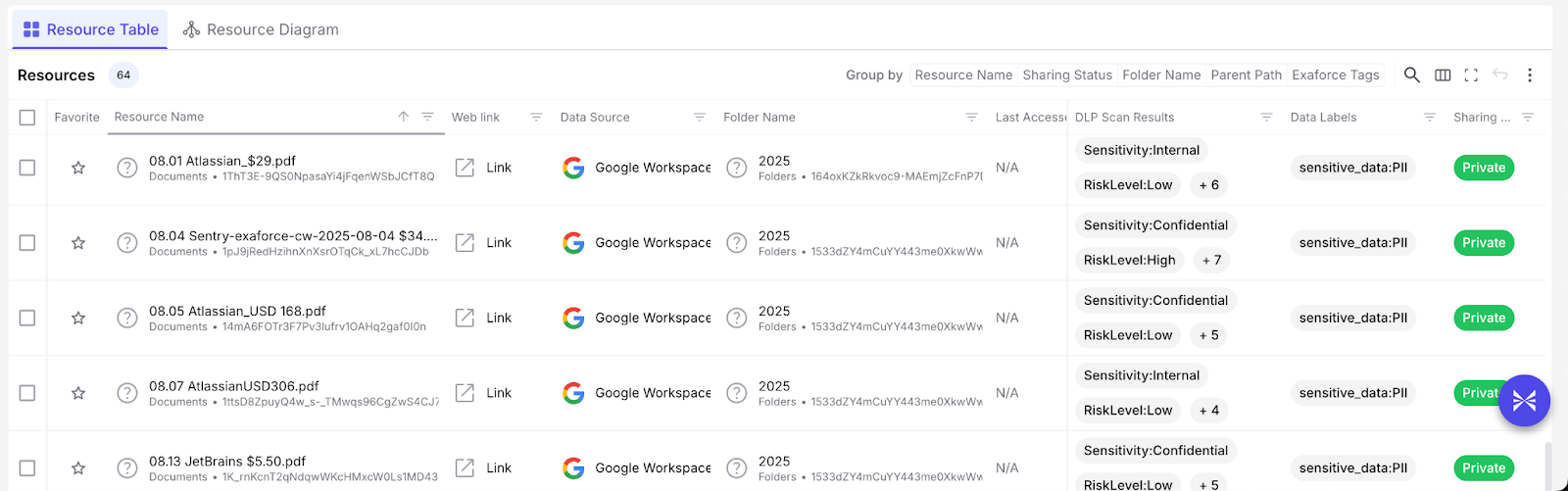

In logs, this appears as a combination of SaaS audit events for bulk exports, report downloads, and administrative actions, plus sudden spikes in document access and sync activity. When data classification from systems such as Google Cloud DLP is present, you can weight events by sensitivity instead of just counting objects.

The difference between noise and value usually lies in baselines and context. A good platform knows what quarter-end access looks like for finance and what abnormal hoarding looks like for a departing engineer, and it understands which objects are payroll files or IP-heavy design documents.
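To make "weight events by sensitivity instead of just counting objects" concrete, here is a small sketch. The label names, the weights in `SENSITIVITY_WEIGHT`, and the "three times the median" rule are assumptions chosen for illustration; real labels would come from whatever classification system is in place.

```python
from statistics import median

# Hypothetical weights per classification label.
SENSITIVITY_WEIGHT = {
    "public": 0.0,
    "internal": 1.0,
    "confidential": 5.0,
    "restricted": 20.0,
}

def weighted_score(events):
    """Sum sensitivity weights over (doc_id, label) download events.

    Unknown labels fall back to a weight of 1.0 (an assumption).
    """
    return sum(SENSITIVITY_WEIGHT.get(label, 1.0) for _doc, label in events)

def exceeds_baseline(score, baseline_scores, factor=3.0):
    """Compare a score with a multiple of the median of past daily scores."""
    return score > factor * median(baseline_scores)

# Illustrative data only: past daily weighted scores for one user.
baseline = [10.0, 12.0, 8.0, 11.0, 9.0]
bulk_public = [(f"doc-{i}", "public") for i in range(40)]
few_restricted = [(f"payroll-{i}", "restricted") for i in range(3)]

public_flag = exceeds_baseline(weighted_score(bulk_public), baseline)
restricted_flag = exceeds_baseline(weighted_score(few_restricted), baseline)
```

Counting objects alone would rank the forty public downloads above the three restricted ones. With weighting, only the restricted downloads exceed the baseline.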

Activity spikes around offboarding and org changes

The Houston contractor case is one end of the offboarding spectrum. Many smaller but still serious incidents involve leavers quietly stealing documents, internal transfers retaining legacy accesses, or contractors continuing to log in long after their contracts end.

The critical signals here include HR data such as resignation dates and contract end dates, identity system records for account status, and any anomalies in privileged actions or data access. A good security platform lets companies add context rules that mark leavers and movers for elevated sensitivity, raises the priority of anomalies that occur in the weeks around those transitions, and attaches recommended mitigations.
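The idea of raising priority around an HR transition can be sketched as a simple date-window rule. Everything here is assumed for illustration: the 1 to 5 priority scale, the 30-day window, the boost of 2, and the function name `adjusted_priority`.

```python
from datetime import date

MAX_PRIORITY = 5  # hypothetical 1-5 priority scale

def adjusted_priority(base_priority, event_day, transition_day,
                      window_days=30, boost=2):
    """Raise an anomaly's priority if it falls near an HR transition.

    transition_day: resignation date, contract end date, or transfer date,
    or None when HR has no transition on record for the identity.
    """
    if transition_day is None:
        return base_priority
    if abs((event_day - transition_day).days) <= window_days:
        return min(base_priority + boost, MAX_PRIORITY)
    return base_priority

# Illustrative data only: a contract ending on 2025-03-20.
contract_end = date(2025, 3, 20)
near = adjusted_priority(2, date(2025, 3, 10), contract_end)
far = adjusted_priority(2, date(2025, 1, 1), contract_end)
```

The same anomaly scores 4 ten days before the contract ends and stays at 2 when it happens months earlier. The window is symmetric, so activity shortly after the end date is boosted as well.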

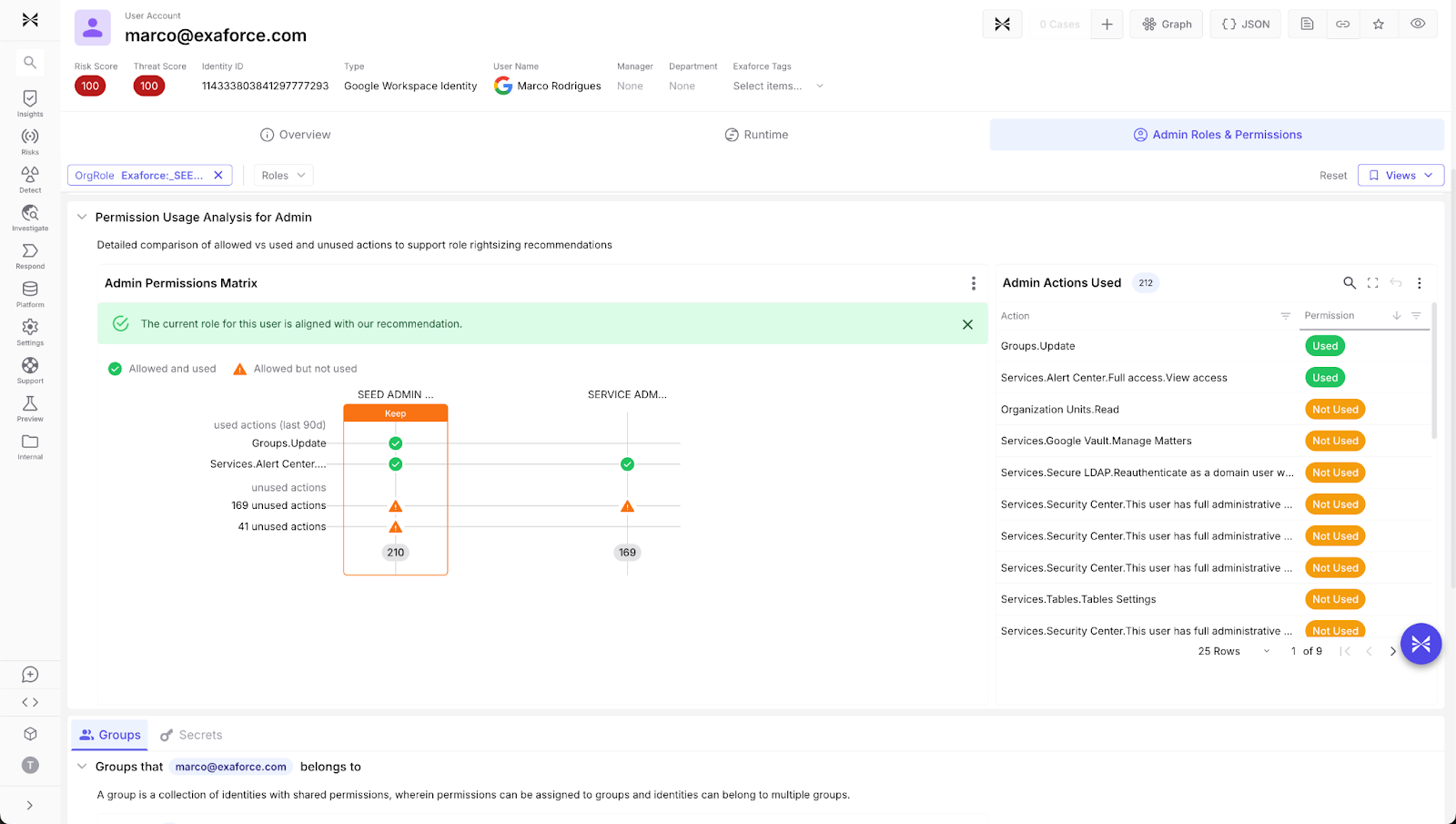

Dormant and overprivileged accounts

Dormant or poorly scoped accounts can turn relatively minor compromises into wide-scale damage. Typical examples include service accounts with domain-wide privileges that are rarely used, break-glass admin accounts with no clear owner, or former employee accounts that remain active in one or two SaaS platforms.

Identity inventories, IAM audit logs, and privilege graphs can reveal accounts that almost never do anything yet have broad entitlements. Once any of those accounts start to show unusual logins, new locations, or high impact actions, you have an insider style incident, regardless of whether the operator is internal or external.

Security platforms should continuously surface dormant high impact identities, feed them into offboarding and privilege review processes, and treat new activity from those accounts as a high priority signal.
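A minimal sketch of surfacing dormant, high-impact identities follows. The record fields (`id`, `entitlements`, `last_active`), the 90-day idle cutoff, and the entitlement threshold are hypothetical; a real inventory would draw on IAM audit logs and a privilege graph rather than a single count.

```python
from datetime import datetime, timedelta

def dormant_high_impact(accounts, now, idle_days=90, min_entitlements=50):
    """Return ids of accounts with broad entitlements and no recent activity.

    accounts: list of dicts with 'id', 'entitlements' (int) and
    'last_active' (datetime, or None if never seen). Hypothetical shape.
    """
    cutoff = now - timedelta(days=idle_days)
    return [
        a["id"]
        for a in accounts
        if a["entitlements"] >= min_entitlements
        and (a["last_active"] is None or a["last_active"] < cutoff)
    ]

# Illustrative data only.
now = datetime(2025, 6, 1)
accounts = [
    {"id": "svc-backup", "entitlements": 400, "last_active": datetime(2024, 11, 3)},
    {"id": "breakglass-admin", "entitlements": 900, "last_active": None},
    {"id": "alice", "entitlements": 120, "last_active": datetime(2025, 5, 30)},
    {"id": "old-intern", "entitlements": 5, "last_active": datetime(2024, 1, 15)},
]
watchlist = dormant_high_impact(accounts, now)
```

The resulting watchlist contains the idle service account and the never-used break-glass account. Any new login from an identity on that list would then be treated as a high-priority signal.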

The blueprint for insider-aware security

A security platform that detects insider risk needs to focus on how identities interact with sensitive assets over time.

It ingests relevant telemetry from identity providers, IAM systems, SaaS platforms, collaboration tools, developer tooling, and, where practical, endpoints and networks. The goal is not to hoard logs but to reconstruct who did what, where, and to which data.

It fuses data classification output from systems like Google Cloud DLP, so that every detection can weigh both behavior and object sensitivity.

It builds and maintains baselines and peer groups as first class entities. These baselines feed detection logic directly. The system can distinguish between a senior engineer ramping up in a new codebase, which looks like exploration, and the same engineer stealing IP before departure, which looks like exfiltration.
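One common way to express a peer-group baseline is a robust z-score built from the median and the median absolute deviation (MAD). This is a generic statistical technique shown as an assumption, not the method of any specific platform, and the 3.5 cutoff is a conventional rule of thumb rather than a recommendation.

```python
from statistics import median

def robust_z(value, peer_values):
    """Robust z-score of `value` against a peer group, using median and MAD.

    The 0.6745 factor rescales MAD so the score is comparable to a standard
    z-score when the data are roughly normal.
    """
    med = median(peer_values)
    mad = median([abs(v - med) for v in peer_values])
    if mad == 0:
        # All peers are identical: any difference is an unbounded deviation.
        return 0.0 if value == med else float("inf")
    return 0.6745 * (value - med) / mad

# Illustrative data only: repositories touched per week by an engineer's peers.
peers = [4, 5, 6, 5, 4, 6, 5]
typical = robust_z(6, peers)
outlier = robust_z(40, peers)
```

A deviation score like this only says that behavior is unusual for the peer group. Telling ramp-up in a new codebase apart from pre-departure exfiltration still requires the context signals discussed in this section, such as project assignments and HR events.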

It supports adding context to encode local knowledge, such as which VPN locations are expected and which employees and contractors are high risk.

Finally, it focuses on incidents rather than events. Instead of presenting dozens of loosely related alerts, it aggregates activity into narrative cases, such as a departing engineer cloning new repositories, downloading confidential documents, and changing access controls in the same time window.
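Aggregating events into cases can be sketched as grouping each identity's activity into time-bounded sessions. The `(timestamp, user, action)` event shape, the 24-hour gap, and the action names are hypothetical; a real platform would correlate on more than identity and time.

```python
from datetime import datetime, timedelta

def build_cases(events, window=timedelta(hours=24)):
    """Group each user's events into cases.

    A new case starts when a user's next event is more than `window`
    after the last event in that user's current case.
    events: list of (timestamp, user, action) tuples (hypothetical shape).
    """
    cases = []
    open_case = {}  # user -> that user's most recent case
    for ts, user, action in sorted(events):
        case = open_case.get(user)
        if case is None or ts - case["end"] > window:
            case = {"user": user, "start": ts, "end": ts, "actions": []}
            cases.append(case)
            open_case[user] = case
        case["end"] = ts
        case["actions"].append(action)
    return cases

# Illustrative data only.
t0 = datetime(2025, 6, 2, 9, 0)
events = [
    (t0, "dana", "clone_new_repo"),
    (t0 + timedelta(hours=2), "dana", "download_confidential_doc"),
    (t0 + timedelta(hours=5), "dana", "change_access_control"),
    (t0 + timedelta(hours=1), "eve", "login"),
    (t0 + timedelta(days=10), "dana", "login"),
]
cases = build_cases(events)
```

Instead of five separate alerts, the analyst sees three cases. The first one ties together the clone, the download, and the access control change by the same identity in one window.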

Exaforce’s approach to insider detection and investigations

In Exaforce, these are delivered as specific, concrete capabilities within a unified platform that supports the full SOC lifecycle. The platform accurately detects, triages, and contextualizes not only insider threats but all threats, and it provides clear, guided response recommendations with optional automated remediation.

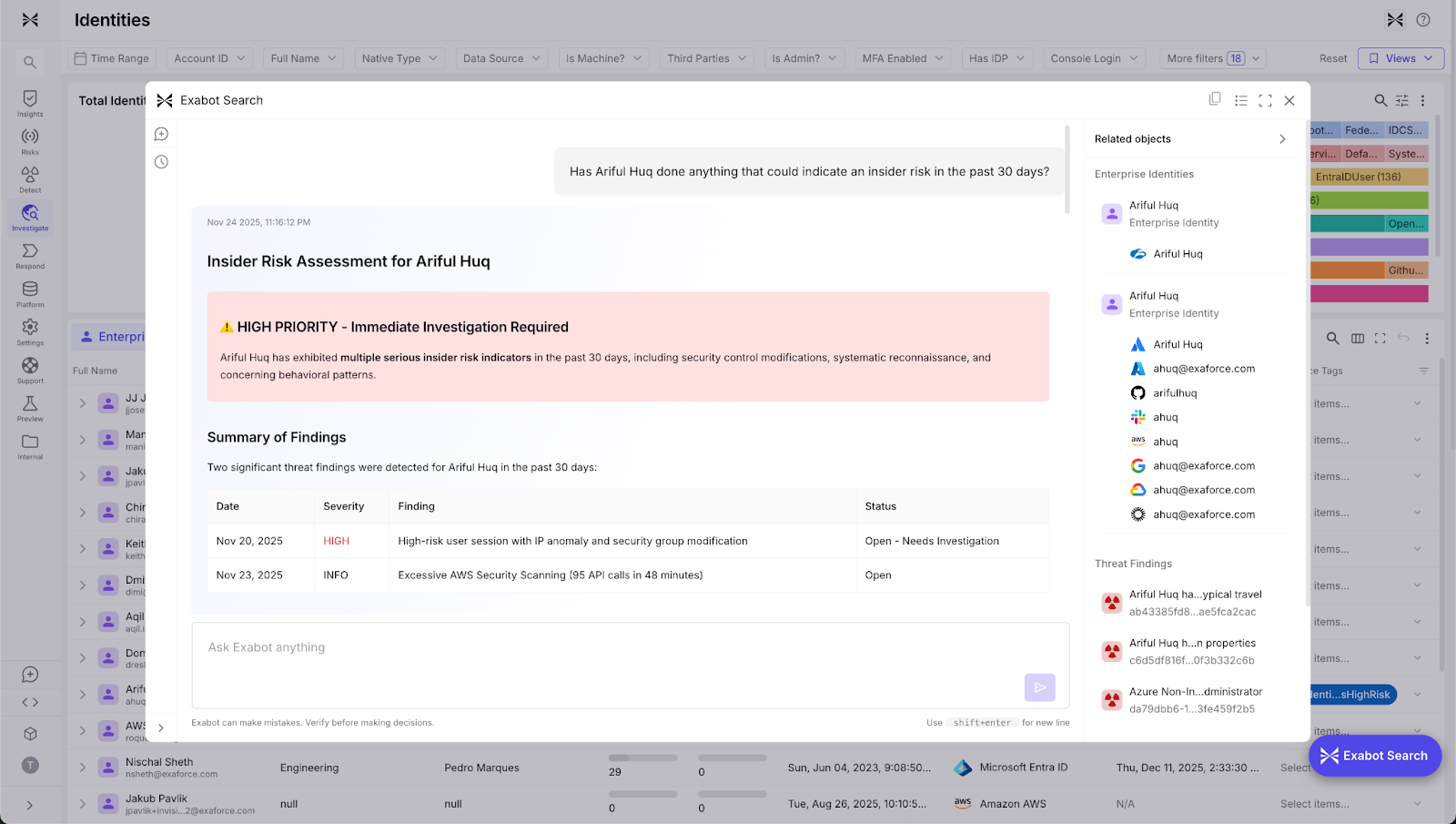

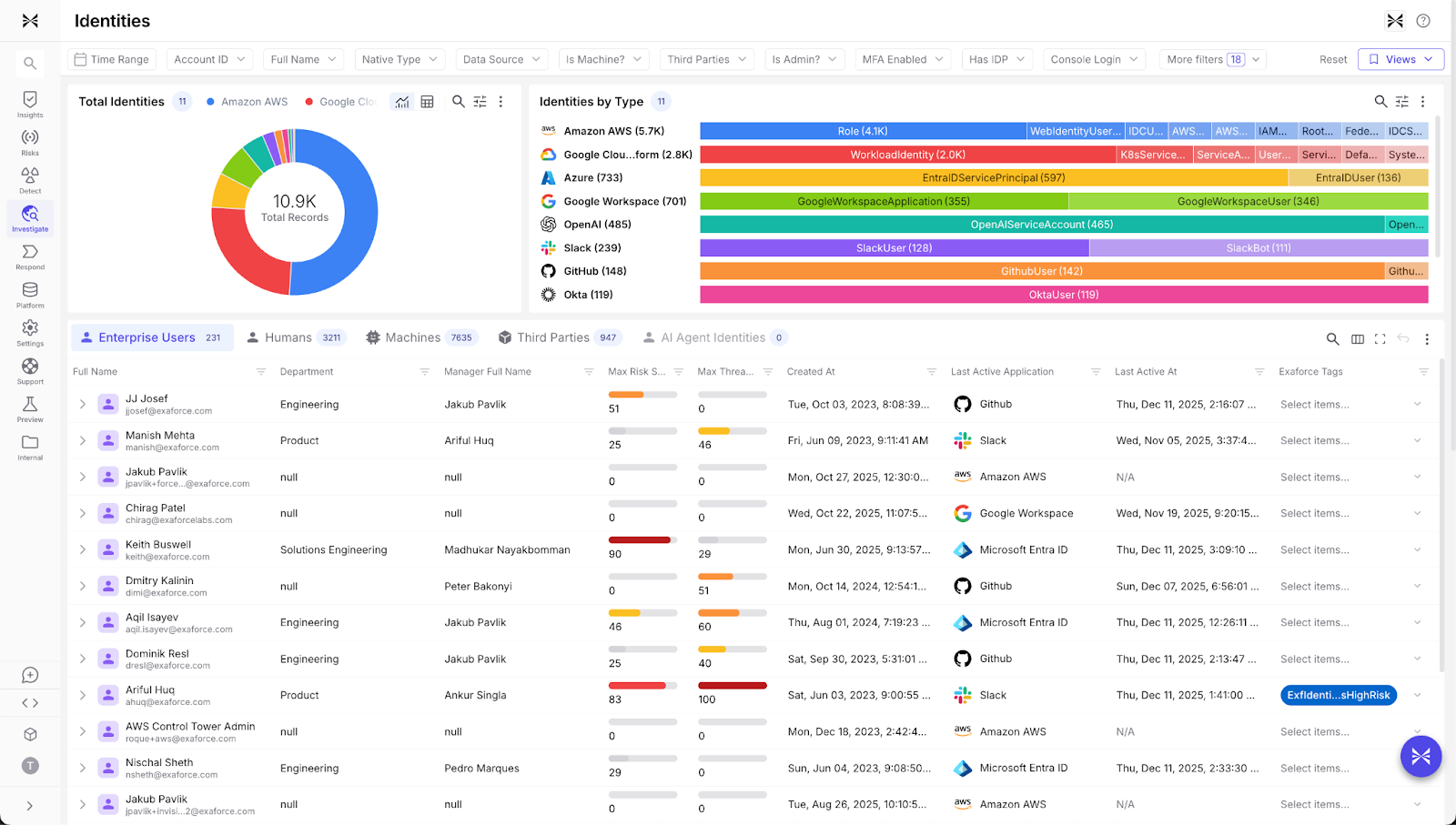

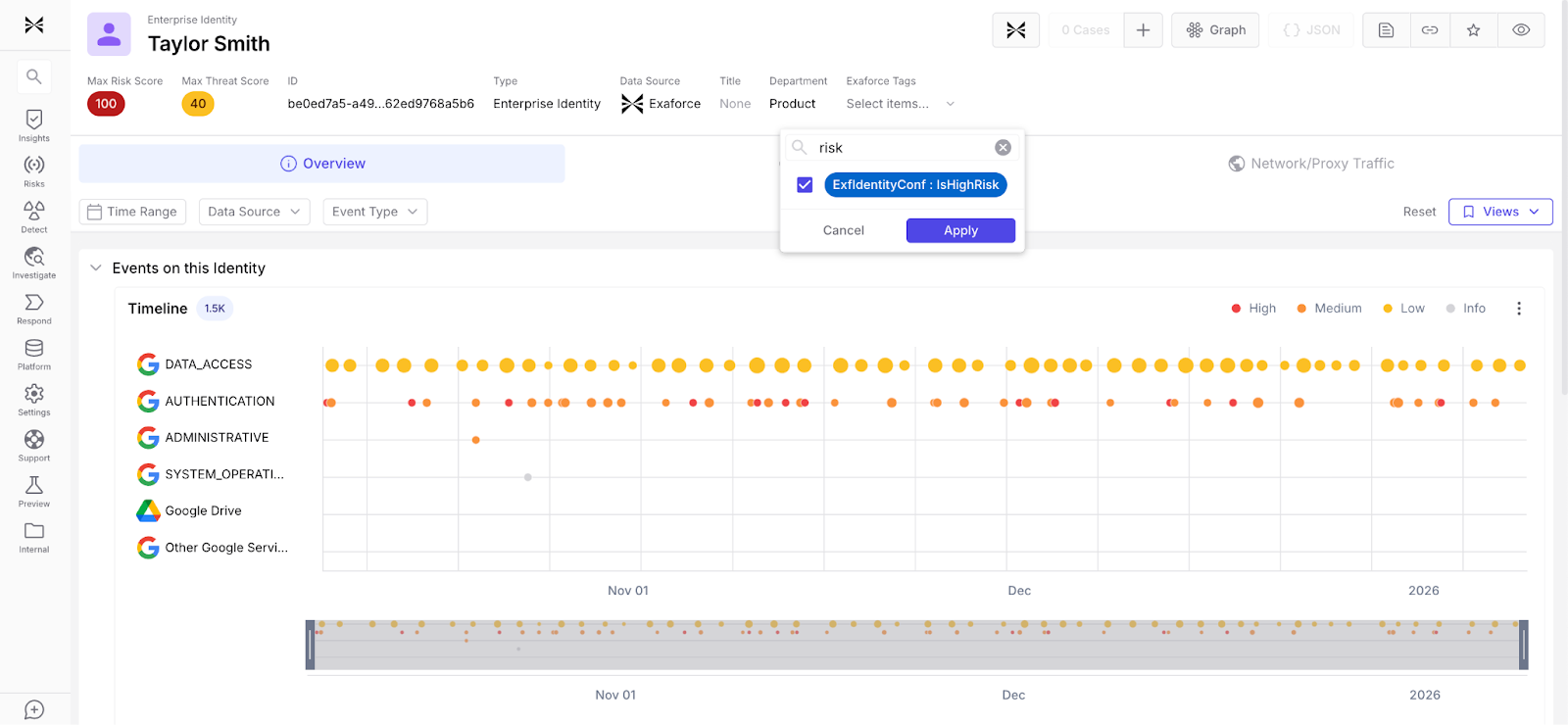

Threat Findings based on semantic connections and behavioral baselines

Exaforce generates threat findings based on behavioral baselines for users, accounts, departments, peer groups, and the entire company across domains such as code usage, SaaS document access, cloud IAM activity, and administrative operations. When someone suddenly clones many repositories they have never touched before or downloads an unusual volume of Google Workspace files, the system raises a threat finding instead of hundreds of isolated alerts. This baseline-driven approach dramatically reduces alert fatigue while ensuring that genuinely suspicious deviations are surfaced with the full context analysts need to make rapid decisions.

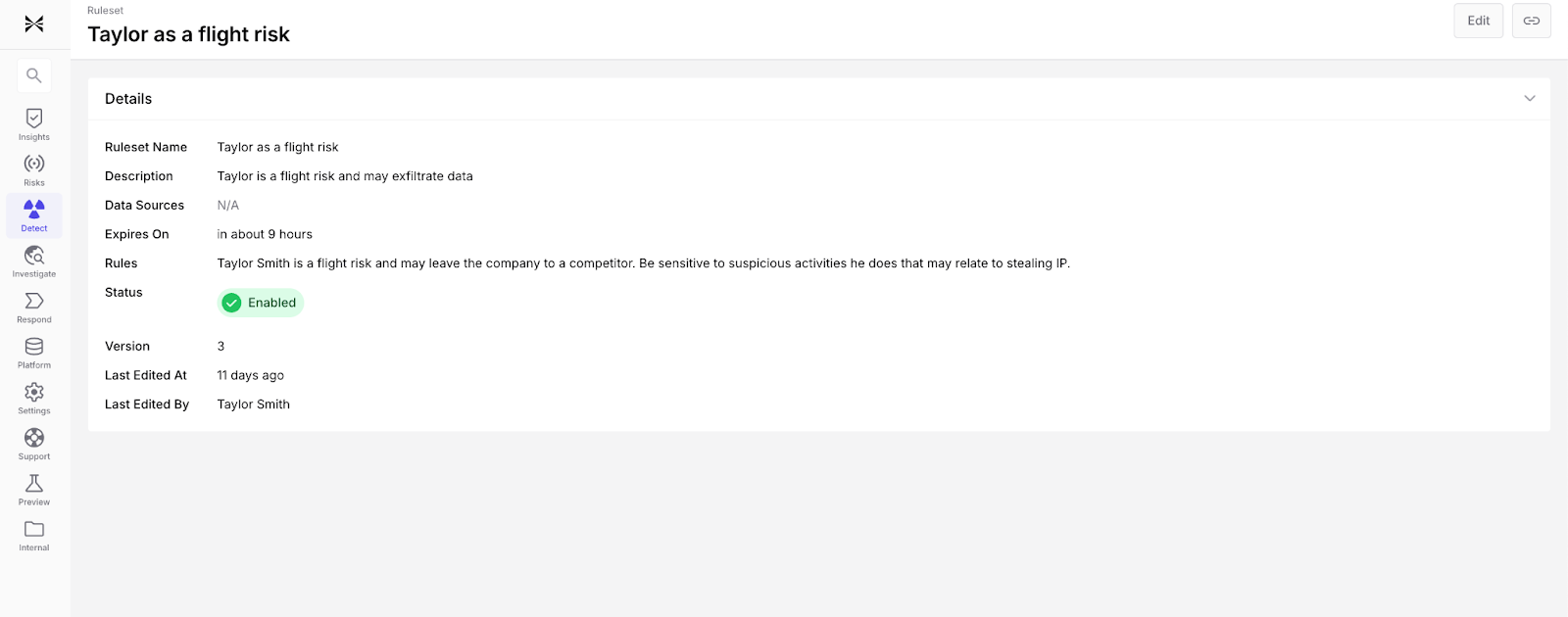

Business Context Rules for organization specific context

Exaforce’s Business Context Rules allow teams to add context about specific people or teams to Exabot's investigation context window. For example, contractors approaching end dates can be monitored more closely, admin actions from unusual VPN locations can be treated as higher risk, and thresholds can be relaxed during sanctioned bursts such as quarter end or PTO dates. This flexible rule system enables security teams to encode organizational knowledge and business logic directly into detection logic without waiting for long learning cycles.

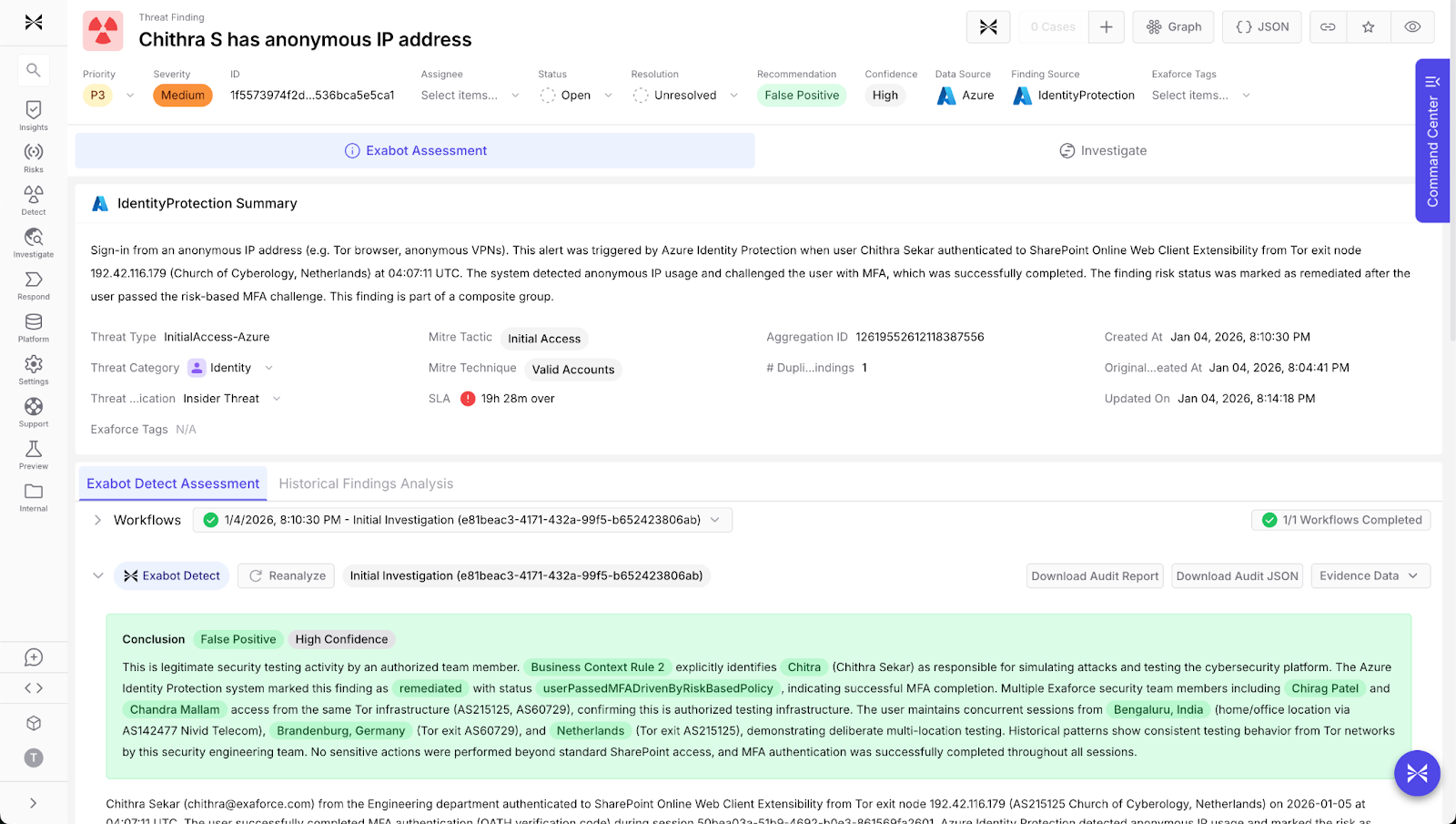

Automatic noise reduction and false positive suppression

Exaforce’s automatic noise reduction and false positive suppression are based on deep business, technological, and historical knowledge and are tailored to every customer. Additionally, Exaforce collapses related signals into a single case that already contains the necessary context for triage and escalation. By intelligently aggregating correlated events and filtering out known benign patterns, teams can focus investigative resources on the small percentage of alerts that represent genuine risk.

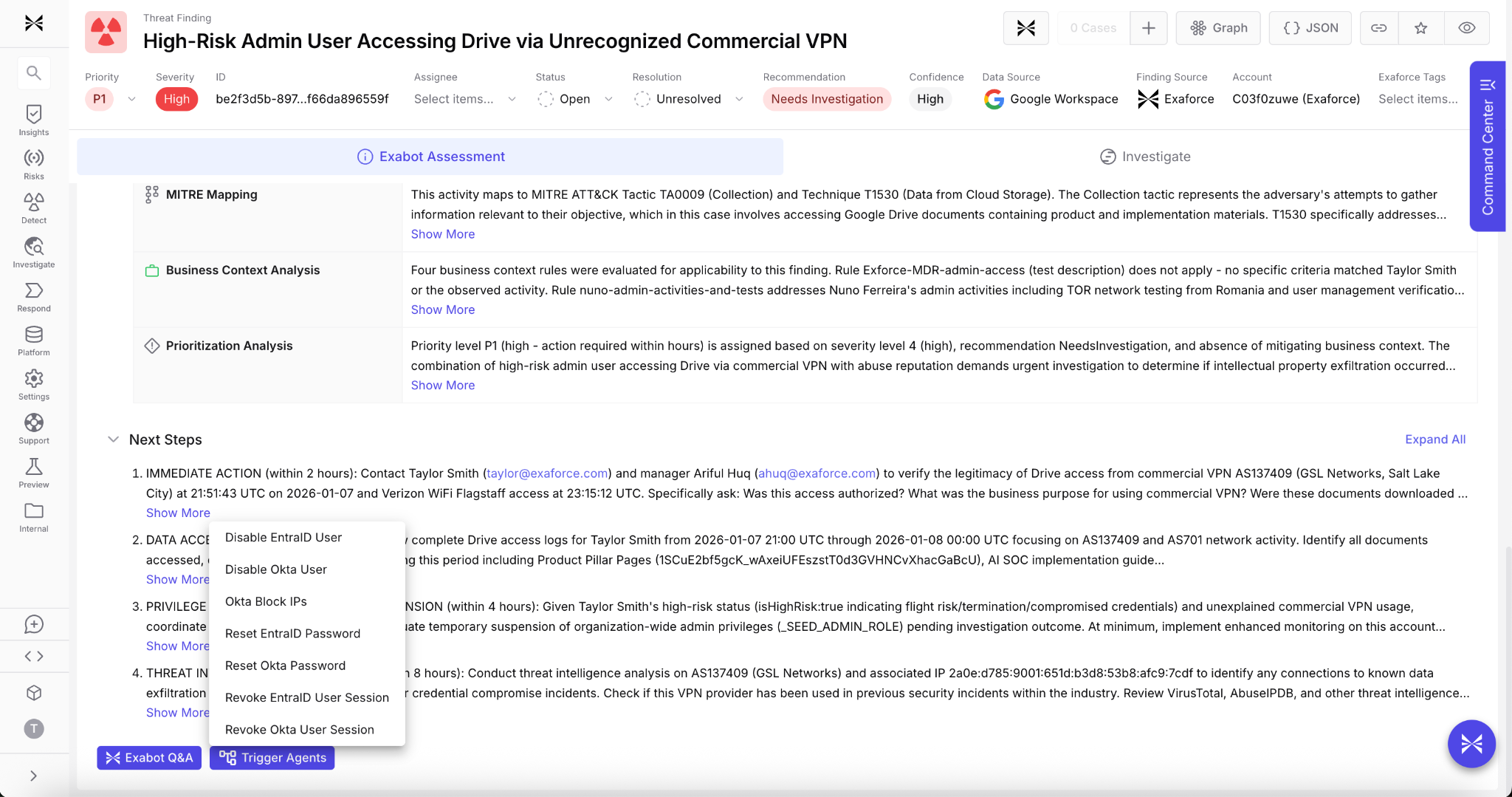

Automated and triggered response actions

Exaforce enables security teams to quickly respond to insider risk incidents through both automatic and human-triggered response actions that execute immediately or escalate for approval. When Exaforce detects a departing employee cloning sensitive repositories, the platform can automatically confirm the behavior with the user and their manager, trigger a periodic report summarizing their activity, or suspend their access pending investigation. For scenarios requiring human judgment, Exaforce supports approval workflows where analysts can review recommended actions and execute them with a single click. Teams can leverage out of the box response actions for common insider scenarios or build custom Automation Agent workflows that orchestrate multi-step responses across identity providers, SaaS platforms, and communication tools.

Simplified insider threat investigations and threat hunting

Exaforce simplifies insider threat investigations through the Data Explorer's BI-like interface and Exabot Search's natural language queries, enabling security analysts to rapidly pivot through identity timelines, access patterns, and asset relationships. Frontline analysts can investigate potential insider incidents immediately without writing complex queries or waiting for specialized threat hunting experts.

Continuous detection of unused or overprivileged accounts

Exaforce’s continuous detection of unused or overprivileged accounts that still have wide effective access feeds both into insider risk detections and into remediation workflows that reduce blast radius and tighten offboarding. By proactively identifying dormant credentials and excessive permissions before they can be exploited, organizations can shrink their attack surface and prevent incidents rather than merely detecting them.

High-risk user tagging for proportionate monitoring

Exaforce allows security teams to tag specific users as high risk, such as departing employees or external contractors, which heightens sensitivity to their activity. This additional context ensures the Knowledge Model applies proportionate scrutiny where it matters most, catching suspicious behavior from sensitive accounts without overwhelming analysts with alerts from the broader user population.

Leveraging native context from SaaS platforms

Exaforce integrates native data classification labels from systems like Google Workspace, automatically ingesting existing sensitivity markers. These labels ensure that detections and risk scores reflect whether the files or assets in question contain sensitive information such as confidential data or PII. By integrating directly with existing data classification systems, Exaforce eliminates the need for redundant labeling and ensures risk assessments reflect the business value and sensitivity of every asset.

Comprehensive data source coverage across SaaS, cloud, identity, and AI platforms

Exaforce ingests telemetry from the full spectrum of modern enterprise platforms, including Google Workspace, Microsoft 365, Slack, GitHub, GitLab, Salesforce, Okta, AWS, Azure, GCP, OpenAI, and dozens of other SaaS, identity, and cloud services. This breadth of coverage ensures that insider risk detection isn't blind to critical activity happening across fragmented tool stacks, enabling the platform to correlate suspicious behavior across every system where identities operate and sensitive data lives.

Insider risk as a security discipline

Insider risk has evolved from an edge case in security planning to a central operational concern. At $17 million per organization annually, with three quarters of incidents stemming from non-malicious actions, the threat is both expensive and ubiquitous. Every legitimate credential now controls access to cloud environments, SaaS platforms, and code repositories that can cripple a business if mishandled, making the challenge as much about ordinary employees making mistakes as it is about deliberate sabotage.

Treating insider risk as a first class discipline means investing in both a cross functional program and a context rich platform. The program defines what unacceptable behavior looks like in your organization, how you balance monitoring effectively, and how you respond when signals appear. The platform gives you the telemetry, classification, baselines, and business context to see those signals early and act consistently. This is where the fundamental shift from traditional security tools to AI-powered platforms becomes critical. Legacy SIEMs and UEBAs can't distinguish between a finance analyst preparing quarter-end reports and that same analyst exfiltrating customer data before departure. Exaforce addresses this challenge by treating context as a first-class feature, fusing behavioral baselines with business context rules and data classification to accurately detect insider risk patterns that would be invisible to tools that only see technical anomalies.

The enterprises that will fare best are those that start building insider visibility and muscle memory now, while the choice is still voluntary and not yet dictated by a major incident. Insiders, whether careless, compromised, or malicious, will always have paths to your valuable systems and data. The question for security and risk leaders is whether the organization can recognize the moment when ordinary access stops looking ordinary and starts to look like real risk, and whether they have a platform capable of making that distinction at scale.