The past, present, and future of security detections

Modern detection goes beyond static rules by understanding the signals that reveal intent, context, and emerging threats.

Detection engineering has always been the heartbeat of the SOC, but it is increasingly straining under pressure. Security teams face an avalanche of alerts, most of which are false positives, while maintaining even a modest set of detections has become an endless cycle of tuning and upkeep. Complex anomaly and behavioral alerts are notoriously difficult to write and validate, and whenever the environment shifts, the rules have to be redesigned. Detection engineers end up buried in maintenance rather than advancing coverage or integrating new data sources. At the same time, the modern enterprise spans IaaS, SaaS, IdPs, and more: a sprawling, interconnected attack surface that overwhelms traditional rule-based logic.

In many teams, the choice comes down to two bad options: disabling detections, either because they are too noisy or because no one on hand has the expertise to write and tune them, or keeping noisy alerts that overwhelm analysts. Both have grave consequences.

Today’s noisy SOCs are the product of how detection logic has evolved and, in some ways, how it hasn’t. To see why, let’s revisit the foundations of SIEM detection itself.

A brief history of SIEM detections

Detection rules have always been the foundation of Security Information and Event Management (SIEM) tools. In fact, security detection tools predate the term SIEM itself, which Gartner introduced in 2005. The roots of modern detection techniques trace back to DARPA-funded expert systems developed at SRI in the 1980s and 1990s, such as IDES, NIDES, and EMERALD, which pioneered an inference-based approach to intrusion detection. These systems maintained a working memory of facts and applied inference engines using forward- and backward-chaining logic to reason about user behavior and anomaly likelihood, dynamically adjusting confidence levels as evidence accumulated. When enterprise data volumes exploded in the early 2000s, this symbolic reasoning evolved into a more scalable, event-driven approach: the correlation engine. Early SIEMs translated the logic of expert reasoning into deterministic, pattern-matching rules optimized for throughput, matching known malicious relationships across massive log streams rather than deriving new hypotheses about system state.

Over the next two decades, the standard rule-based approach proved powerful but limited. Its inability to recognize patterns over long periods of time and to identify outliers created the need for a more statistical approach. The introduction of User and Entity Behavior Analytics (UBA/UEBA) in the mid-2010s re-injected statistical modeling and machine learning, allowing SIEMs to spot deviations from baselines rather than depend solely on handcrafted rules. This marked a key turning point from deterministic correlation toward probabilistic and behavioral detection. Meanwhile, Security Orchestration, Automation, and Response (SOAR) and Endpoint/Extended Detection and Response (EDR/XDR) tools expanded the operational boundaries of detection by connecting more telemetry, normalizing signals across domains, and streamlining response. Their core logic, however, remained largely pattern- or behavior-based, which continued to produce untenable false positive rates and heavy maintenance requirements.

The Exaforce approach to detection

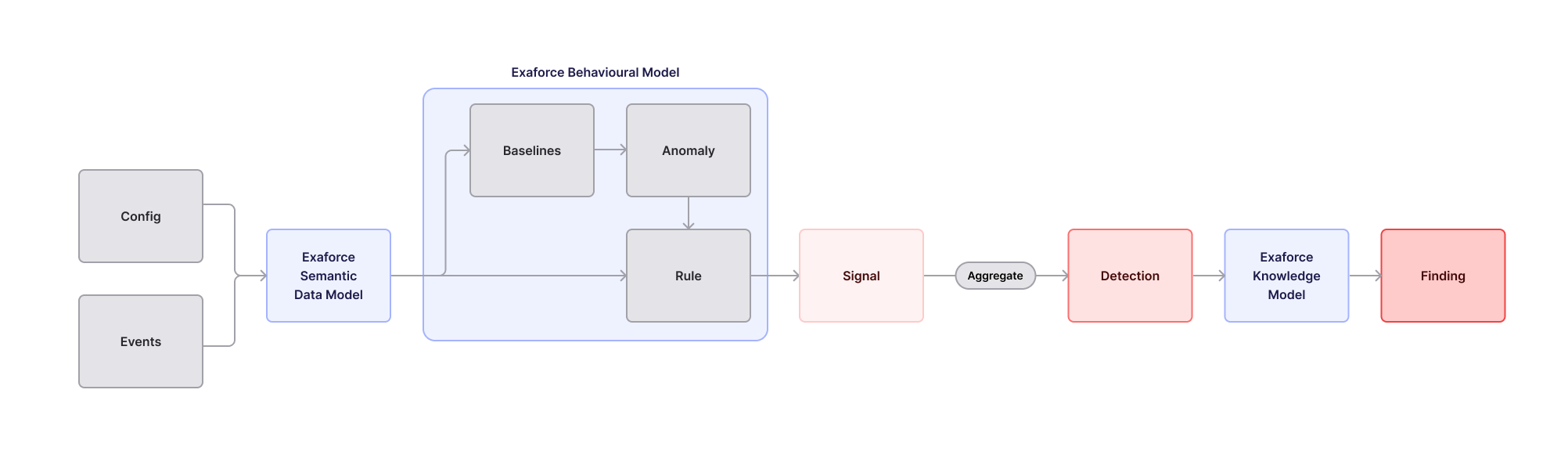

Taking inspiration from the aforementioned expert systems, Exaforce employs a multi-tier detection architecture designed to balance volume and fidelity.

The first tier is the semantic data model, which ingests log and configuration data and processes it for downstream consumption. The next tier is the behavioral model. It consists of high-volume, baseline- and anomaly-driven alerts that monitor activity across numerous behavioral dimensions, such as user, account, peer group, organizational level, and day/time. Metrics like event frequency, access rates, and location patterns are evaluated along these dimensions and, in combination, trigger a rule. The outputs of the behavioral model are Signals. Each signal represents a medium-fidelity indicator of suspicion and is continuously tuned and optimized.
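To make the behavioral tier concrete, here is a minimal sketch of a single baseline check, assuming a simple z-score over an entity's past event counts. The `Signal` type, the `frequency_signal` function, the three-standard-deviation threshold, and the mapping from z-score to strength are hypothetical illustrations, not Exaforce's implementation.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Optional


@dataclass
class Signal:
    name: str
    entity: str
    strength: float  # medium-fidelity suspicion score in [0, 1]


def frequency_signal(entity: str, history: list[float], current: float,
                     z_threshold: float = 3.0) -> Optional[Signal]:
    """Emit a Signal when the current event count deviates from the entity's baseline.

    history: past per-period counts for this entity (the baseline).
    current: the count for the period being evaluated.
    """
    if len(history) < 2:
        return None  # too little data to form a baseline
    mu = mean(history)
    sigma = pstdev(history) or 1.0  # flat baseline: fall back to a unit spread
    z = (current - mu) / sigma
    if z < z_threshold:
        return None  # within the normal range, so no signal
    # Map the z-score onto a bounded strength; this scaling is an arbitrary choice.
    strength = min(1.0, z / (2 * z_threshold))
    return Signal("Event Frequency Anomaly", entity, round(strength, 2))
```

Keeping one history per key (user, peer group, hour of day) would apply the same check along several of the behavioral dimensions described above.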

A single signal may encapsulate multiple underlying baselines. For example, “User connecting from a never-before-seen ASN” and “User typically connects from a cloud ASN but now appears from a non-cloud ASN” are distinct baselines that both contribute to an ASN Anomaly signal.
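A sketch of how two distinct baselines might feed one ASN Anomaly signal follows. `AsnBaseline`, `asn_anomaly`, and the 90% "typically cloud" ratio are assumptions made for illustration; ASNs 64500 and 64501 come from the range reserved for documentation.

```python
from dataclasses import dataclass, field


@dataclass
class AsnBaseline:
    """Per-user ASN history; these fields are illustrative, not a real schema."""
    seen_asns: set[int] = field(default_factory=set)
    cloud_logins: int = 0
    total_logins: int = 0

    def mostly_cloud(self, ratio: float = 0.9) -> bool:
        # "Typically connects from a cloud ASN" modeled as a share of past logins.
        return self.total_logins > 0 and self.cloud_logins / self.total_logins >= ratio


def asn_anomaly(baseline: AsnBaseline, asn: int, is_cloud_asn: bool) -> list[str]:
    """Return the baselines that fired; a non-empty list yields one ASN Anomaly signal."""
    reasons = []
    if asn not in baseline.seen_asns:
        reasons.append("never-before-seen ASN")
    if baseline.mostly_cloud() and not is_cloud_asn:
        reasons.append("usually cloud ASN, now non-cloud ASN")
    return reasons
```

Both reasons collapse into a single signal, so downstream stages see one ASN Anomaly carrying its contributing baselines rather than two separate alerts.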

While most signals are anomaly-based, they can also originate from deterministic rules, such as access from banned countries or execution of sensitive actions.
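Deterministic signals need no baseline at all. In this hypothetical sketch, the banned-country and sensitive-action lists are placeholders, and the event field names (`country`, `action`) are assumed rather than taken from any real log schema.

```python
# Hypothetical rule inputs; a real deployment would load these from policy.
BANNED_COUNTRIES = {"XA", "XB"}  # ISO 3166 user-assigned codes used as placeholders
SENSITIVE_ACTIONS = {"config.delete", "mfa.disable"}  # hypothetical action names


def rule_signals(event: dict) -> list[str]:
    """Deterministic checks: each matching rule emits a named signal."""
    signals = []
    if event.get("country") in BANNED_COUNTRIES:
        signals.append("Banned Country Access")
    if event.get("action") in SENSITIVE_ACTIONS:
        signals.append("Sensitive Action")
    return signals
```

Because these signals share the same output form as anomaly-based ones, they can be aggregated alongside them.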

These rules and anomalies may also be based on configuration information about the resource or actor. For example, machine and human users, or admins and non-admins, can have different baselines.
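One way to let configuration shape a baseline, sketched under assumptions: derive a key from actor attributes and keep a separate baseline per key. The attribute names `is_service_account` and `is_admin`, the peak-rate baseline, and the 1.5× margin are hypothetical.

```python
from collections import defaultdict


def actor_class(actor: dict) -> tuple[str, str]:
    """Derive a baseline key from configuration attributes (hypothetical field names)."""
    kind = "machine" if actor.get("is_service_account") else "human"
    role = "admin" if actor.get("is_admin") else "non-admin"
    return kind, role


class ClassBaselines:
    """Keeps a separate peak hourly API-call rate for each actor class."""

    def __init__(self) -> None:
        self.peak_rate: dict[tuple[str, str], float] = defaultdict(float)

    def observe(self, actor: dict, rate: float) -> None:
        key = actor_class(actor)
        self.peak_rate[key] = max(self.peak_rate[key], rate)

    def is_anomalous(self, actor: dict, rate: float, margin: float = 1.5) -> bool:
        peak = self.peak_rate.get(actor_class(actor))  # .get does not insert a default
        if peak is None:
            return False  # no baseline yet for this class
        return rate > peak * margin
```

In this sketch, with a machine peak of 1,000 calls per hour and a human peak of 20, a rate of 100 is flagged for a human but not for a service account.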

Signals are then aggregated into detections by correlating them across time windows or sessions. When the aggregate signal strength crosses a threshold, the detection proceeds to the next stage of the detection pipeline, where higher-order reasoning and contextual enrichment refine it into a high-fidelity, actionable finding.
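The aggregation step can be sketched as grouping signals by session (or, when no session exists, by entity and fixed time window) and summing their strengths against a threshold. The additive scoring, the 1.0 threshold, and the one-hour window are illustrative assumptions chosen for clarity, not Exaforce's scoring.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Signal:
    name: str
    entity: str
    timestamp: float  # seconds since the epoch
    strength: float   # medium-fidelity score in [0, 1]
    session_id: Optional[str] = None


def correlation_key(sig: Signal, window_s: int) -> tuple:
    # Prefer the session; otherwise bucket the entity's signals into fixed time windows.
    if sig.session_id is not None:
        return ("session", sig.session_id)
    return ("window", sig.entity, int(sig.timestamp // window_s))


def aggregate(signals: list[Signal], threshold: float = 1.0,
              window_s: int = 3600) -> list[dict]:
    """Group correlated signals and emit a detection when their combined strength is high enough."""
    groups: dict[tuple, list[Signal]] = defaultdict(list)
    for sig in signals:
        groups[correlation_key(sig, window_s)].append(sig)
    detections = []
    for key, group in groups.items():
        score = sum(s.strength for s in group)  # naive additive strength
        if score >= threshold:
            detections.append({"key": key,
                               "signals": [s.name for s in group],
                               "score": round(score, 2)})
    return detections
```

A lone medium-strength signal stays below the threshold, while several weaker signals in the same session can jointly cross it.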

This stage uses Exaforce’s knowledge model to evaluate each detection, adding the context needed to properly assess the detection and its signals. Context added here includes:

- Configuration information about the system, resource, user, etc.

- Business context

- Similar historical findings

- Related alerts that are part of an Attack Chain

- Historical baseline information about the user, system, and resource
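A minimal data structure for carrying the context categories listed above might look like this. The field names and the idea of one lookup function per category are hypothetical; they illustrate the shape of enrichment, not Exaforce's schema.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class EnrichedDetection:
    detection_id: str
    signals: list[str]
    # One slot per context category; all field names here are hypothetical.
    configuration: dict = field(default_factory=dict)
    business_context: dict = field(default_factory=dict)
    similar_findings: list = field(default_factory=list)
    attack_chain_alerts: list = field(default_factory=list)
    historical_baselines: dict = field(default_factory=dict)


def enrich(det: EnrichedDetection,
           sources: dict[str, Callable[[EnrichedDetection], Any]]) -> EnrichedDetection:
    """Fill each context slot by calling the matching lookup function."""
    for slot, fetch in sources.items():
        if not hasattr(det, slot):
            raise KeyError(f"unknown context slot: {slot}")
        setattr(det, slot, fetch(det))
    return det
```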

Using this additional context, the knowledge model assesses the signals and determines whether the detection is likely a false positive. Users are notified only about true positives, which are added to the queue; false positives are preserved in a separate section for forensic analysis and threat hunting.
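The routing described here can be sketched as follows. The knowledge model's actual judgment is not reproduced; `toy_judge` is a hypothetical rule-based stand-in that treats a detection as a true positive if any signal is left unexplained by context. Keeping the reasoning alongside archived detections reflects the point that false positives remain available for hunting.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    true_positive: bool
    reasoning: str  # kept so archived false positives remain useful for hunting


def triage(detections: list[dict],
           judge: Callable[[dict], Verdict]) -> tuple[list, list]:
    """Route detections: true positives to the analyst queue, the rest to an archive."""
    queue, archive = [], []
    for det in detections:
        verdict = judge(det)
        target = queue if verdict.true_positive else archive
        target.append((det, verdict))
    return queue, archive


def toy_judge(det: dict) -> Verdict:
    # Hypothetical stand-in for the knowledge model: flag a detection only if
    # at least one of its signals was not explained away by context.
    unexplained = [s for s in det["signals"] if s not in det.get("explained", [])]
    if unexplained:
        return Verdict(True, f"unexplained signals: {unexplained}")
    return Verdict(False, "all signals explained by context")
```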

Example where the signal approach outperforms the traditional approach

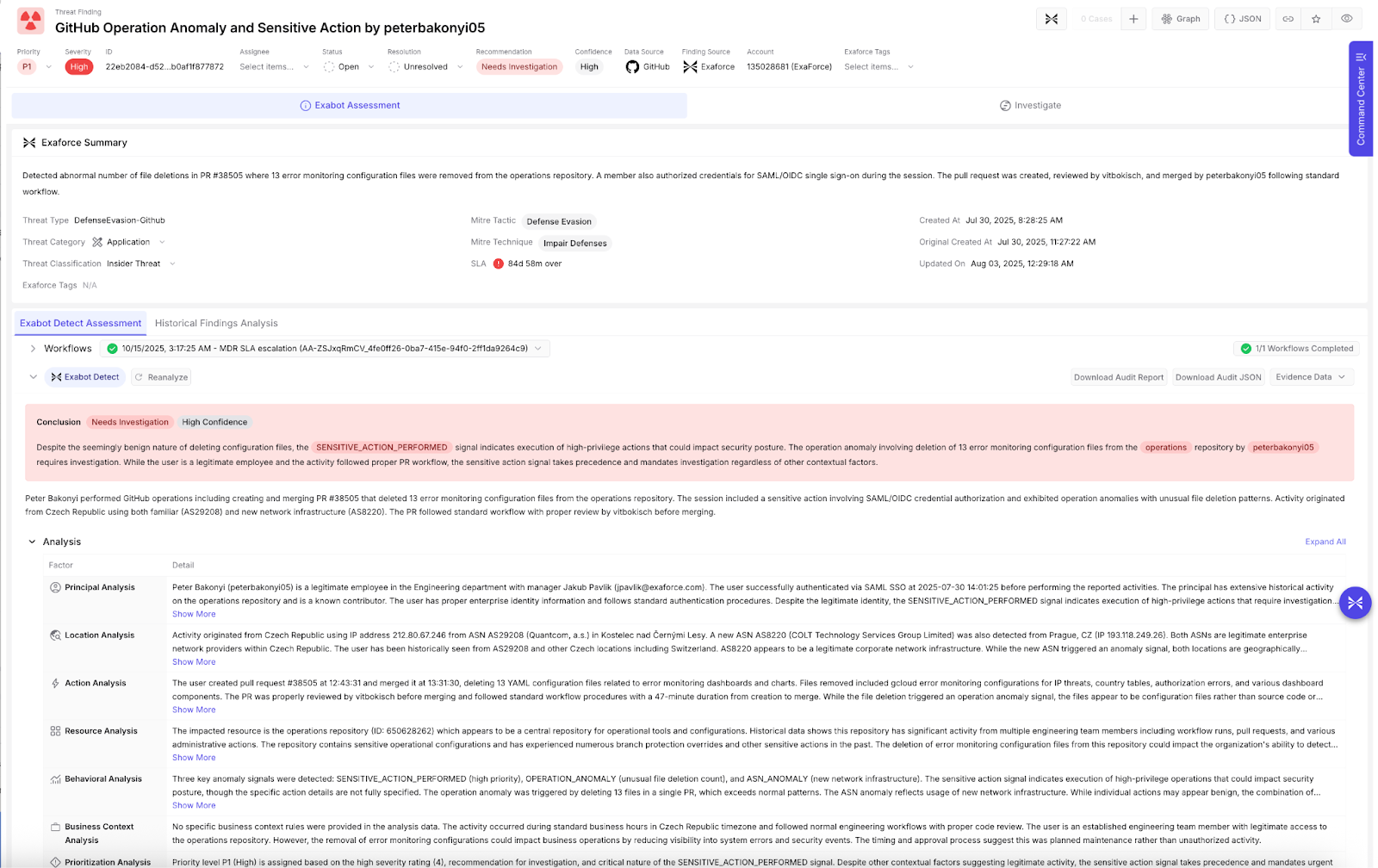

In this example, a GitHub user deleted 13 configuration files for error monitoring dashboards and alerts. As a result, three signals were generated by the Exaforce behavioral model:

- Sensitive Action: triggered by the deletion of error-monitoring configuration files.

- Anomalous ASN: a new ASN was observed for this user.

- Operational Anomaly: an unusually high number of file deletions compared with the baseline for users across the organization.

The ASN and Operational Anomaly signals were produced through baseline deviations, while the Sensitive Action signal originated from a more traditional rule. Because all three occurred within a single user session, they were aggregated into a single detection.

During the subsequent Knowledge Model phase, additional context about the user, peers, and historical baselines was applied. Exabot observed that this user is typically seen connecting from IP 212.80.67.246 under ASN AS29208 in the Czech Republic. In this instance, the user appeared from a new ASN, but one that is commonly used within the organization. Based on this context, Exabot concluded that the ASN signal was likely non-material, while the file deletion activity remained notable. The combined assessment was then promoted to a Finding, along with an explanation summarizing Exabot’s reasoning and a supplementary structured-data dashboard.
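The contextual reasoning in this example can be approximated, very loosely, in code. AS29208 comes from the example; ASN 64500 is a documentation-range placeholder for the unnamed new ASN, and the organization-wide user counts and the five-user cutoff are hypothetical. The verdicts for the other two signals are hard-coded to match the narrative rather than computed.

```python
def assess_asn_signal(user_asns: set[int], org_asn_users: dict[int, int],
                      new_asn: int, min_org_users: int = 5) -> str:
    """Judge an ASN signal against user and organization context (hypothetical logic)."""
    if new_asn in user_asns:
        return "not anomalous"
    if org_asn_users.get(new_asn, 0) >= min_org_users:
        return "non-material"  # new for this user, but common across the org
    return "material"


def assess_detection(verdicts: dict[str, str]) -> dict:
    """Promote to a Finding if any signal remains material after context."""
    material = [name for name, v in verdicts.items() if v == "material"]
    return {"promote": bool(material), "material_signals": material}
```

For instance, `assess_asn_signal({29208}, {64500: 40}, 64500)` returns `"non-material"`, and combining that verdict with material Sensitive Action and Operational Anomaly verdicts still promotes the detection, with only those two signals listed as material.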

In a traditional SIEM, this scenario would have required at least three separate correlation rules and multiple baselines. Building each baseline and then the corresponding correlation rules would have been a significant task for the detection team. Each alert would have fired independently, with no default mechanism to aggregate them. Analysts would have needed to manually investigate whether the new ASN was benign or suspicious, unless the detection team had preemptively excluded all known corporate ASNs, a brittle and maintenance-heavy approach. By contrast, in the Exaforce model, the ASN rule can remain broad, since later contextual enrichment distinguishes benign deviations from true threats, maintaining visibility without generating noise. Finally, the natural-language summary of the finding and its context would have had to be written by an experienced analyst familiar with the alert structure and data.

Why this matters

The Exaforce approach builds on the foundational constructs of early SIEM and intrusion detection systems but modernizes them with the scale, precision, and adaptability of modern behavioral and agentic models. The high-volume anomaly tier ensures that every meaningful outlier is detected. A noisy rule carries no penalty here, because its output is filtered by the knowledge model before reaching the user; rules therefore don’t have to be hyper-tuned and can still provide valuable signals. For many SOC teams today, a detection can be enabled only if its output volume is manageable by the analyst team, so many lower-fidelity alerts are either disabled or heavily tuned. Exaforce’s behavioral model inverts this paradigm: it encourages teams to enable low-fidelity indicators and lets the later stages of the pipeline assess and contextualize them. This materially unburdens the SOC team, not just at the analysis level but also at the detection engineering stage, since rules do not have to be tuned, disabled, or caveated.

Performing this kind of evaluation manually in a traditional system would be prohibitively expensive and overwhelming for even the most mature SOC teams. Exaforce’s multi-tier architecture ensures that only high-fidelity, context-aware findings reach the analyst, dramatically reducing noise without sacrificing security.

While some legacy SIEMs have attempted multi-layered detection, they often become brittle and cumbersome, placing the burden on the SOC’s detection engineering team to manage an ever-growing web of baselines and rules. In the Exaforce model, this complexity is handled automatically by Exaforce’s own expert systems and continuously managed knowledge models. Teams can still add or modify detections as needed, but by default, tuning, baselining, and correlation are intelligently maintained by Exaforce.

This architecture of managed systems, high-volume, low-fidelity alerts, and multiple levels of aggregation and context can be game-changing for a SOC. It frees your critical detection engineering team to research novel threats, assist with threat hunting, and tune rules only when necessary.
