Security incidents rarely arrive neatly labeled.

In modern cloud and SaaS environments, the hardest situations are not obvious attacks. They are legitimate, well-intentioned actions carried out in unsafe ways, through trusted identities and from places no one anticipated. These are the moments when even experienced security teams pause, because the signals are real but the intent is unclear.

This is a real incident we experienced internally at Exaforce. It is anonymized, accurate, and representative of the challenges security teams face every day.

Rapid detection and triage

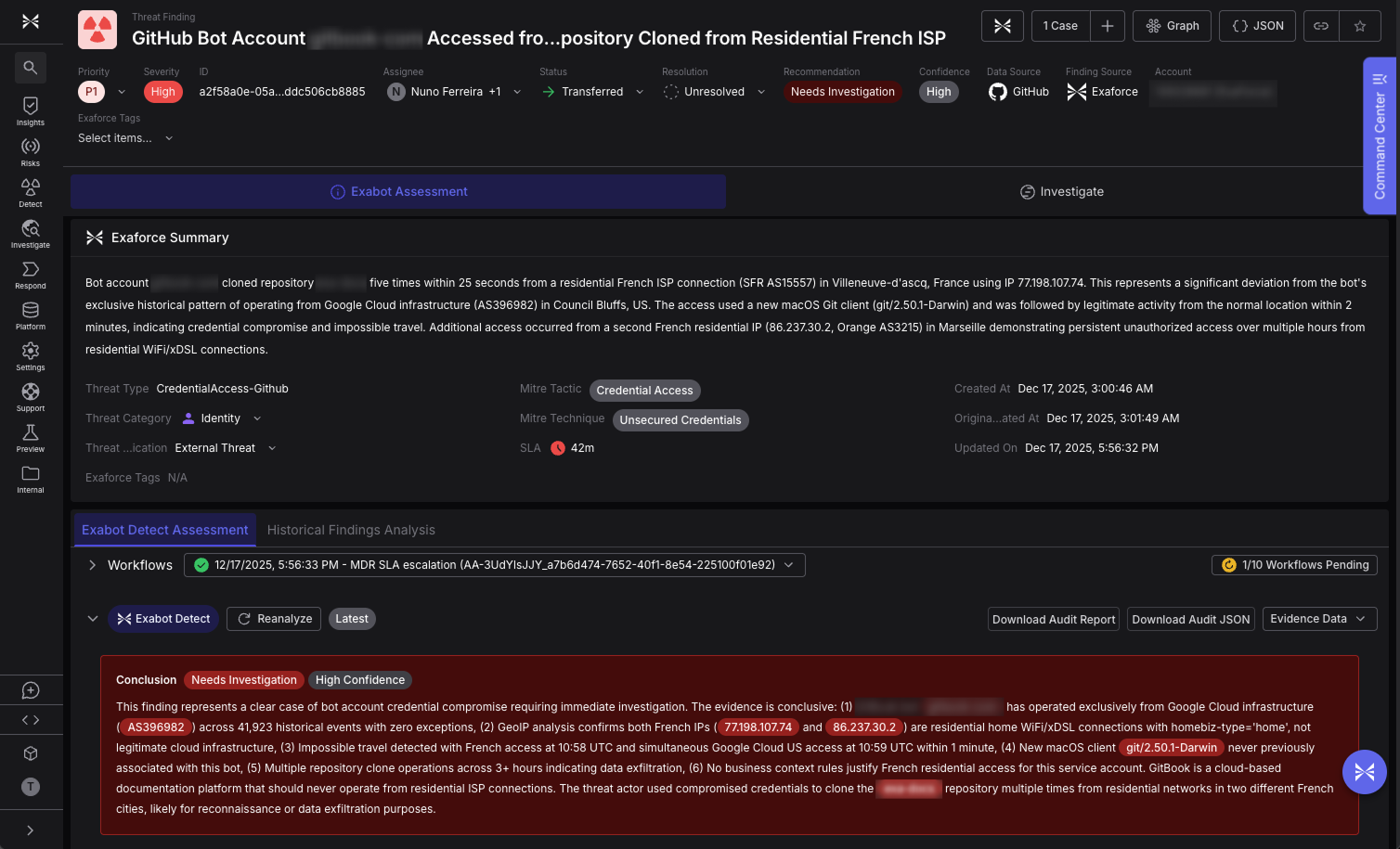

Because we drink our own champagne, our platform ingests live data from our own environment, and a high-severity P1 alert surfaced in the platform in real time. Exaforce immediately correlated the underlying signals into a single, aggregated incident, and our managed detection and response (MDR) process engaged as designed. The alert was triaged within the SLA.

At that moment, the observable telemetry warranted a “treat as breach until proven otherwise” posture. The signals were consistent with compromise, even though we did not yet have enough context to determine whether the activity was malicious or unexpected (but legitimate) third-party behavior.

What the platform observed before any human investigation

The activity involved a non-human identity tied to a third-party SaaS integration. Specifically, a GitHub App token was used to generate a JWT and authenticate access to clone content from our public-facing documentation repository to an unknown laptop. Historically, this identity’s behavior had been consistent and predictable; that baseline changed abruptly. Although no production code was involved, the pattern was concerning and we treated it accordingly. We maintain strong controls to prevent access to production systems and sensitive production code, and those safeguards are designed to ensure that an event like this cannot escalate beyond non-public-facing repositories.

The platform observed several deviations occurring together over a short period of time:

- The identity began operating in a new country

- The source network shifted to a residential Internet service provider

- The user agent changed to a workstation-based Git client

- Multiple full repository clone operations occurred in quick succession

- At the same time, the same identity continued operating normally from its usual cloud environment

Individually, none of these signals is rare. Geographic anomalies are noisy. Network changes happen. Repository access can be legitimate. Many security tools alert on these events in isolation, and most teams learn to tune them out. What mattered here was concurrency.

The same identity was active in two different environments at the same time. That overlap created an impossible travel scenario for a bot identity and changed the risk assessment.
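To make the concurrency check concrete, here is a minimal sketch of one way to test whether a single identity was active in two environments at overlapping times. It is an illustration only, not a description of how the Exaforce platform works: the `Event` type, the `environment` labels, the identity names, and the timestamps are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    identity: str
    environment: str  # hypothetical label such as "cloud" or "residential"
    timestamp: datetime


def identities_with_overlap(events):
    """Return identities whose activity spans in two environments overlap in time."""
    spans = {}  # (identity, environment) -> (first_seen, last_seen)
    for e in events:
        key = (e.identity, e.environment)
        first, last = spans.get(key, (e.timestamp, e.timestamp))
        spans[key] = (min(first, e.timestamp), max(last, e.timestamp))

    flagged = set()
    keys = sorted(spans)
    for i, (ident_a, env_a) in enumerate(keys):
        for ident_b, env_b in keys[i + 1:]:
            if ident_a != ident_b:
                continue
            start_a, end_a = spans[(ident_a, env_a)]
            start_b, end_b = spans[(ident_b, env_b)]
            # Two closed intervals overlap when the later start is not after the earlier end.
            if max(start_a, start_b) <= min(end_a, end_b):
                flagged.add(ident_a)
    return flagged


# Made-up sample data: "docs-bot" is seen on a residential network while still
# active in the cloud; "deploy-bot" simply moves from one environment to another.
events = [
    Event("docs-bot", "cloud", datetime(2025, 1, 1, 10, 0)),
    Event("docs-bot", "residential", datetime(2025, 1, 1, 10, 5)),
    Event("docs-bot", "cloud", datetime(2025, 1, 1, 10, 10)),
    Event("deploy-bot", "cloud", datetime(2025, 1, 1, 10, 0)),
    Event("deploy-bot", "residential", datetime(2025, 1, 1, 11, 0)),
]
print(identities_with_overlap(events))  # {'docs-bot'}
```

A single first-seen/last-seen span per environment is deliberately coarse. A more realistic check would likely compare shorter sessions or sliding windows, but the core idea is the same: a clean move between environments does not overlap, while simultaneous use does.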

Why traditional detection approaches struggle in situations like this

In many environments, this activity would have generated multiple independent alerts:

- A geographic anomaly alert

- A network or autonomous system number (ASN) change alert

- A repository access alert

- Possibly a third-party integration alert

Each alert would be separate and isolated, with different timestamps and limited context. An experienced analyst would need to manually correlate the signals, reconstruct a timeline, and determine whether the activity represented coincidence, misconfiguration, or compromise.

That process takes time. Time is exactly what attackers rely on, and it is also what legitimate-but-unexpected activity consumes.
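The manual correlation described above is, at its simplest, a grouping problem: alerts that share an identity and arrive close together probably belong to one incident. The sketch below shows that idea under stated assumptions. The `Alert` type, the alert kinds, the 30-minute window, and the sample data are all hypothetical, and real correlation logic would weigh far more context than identity and time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    identity: str
    kind: str
    timestamp: datetime


def group_into_incidents(alerts, window=timedelta(minutes=30)):
    """Group alerts per identity; an alert joins the identity's latest incident
    if it arrives within `window` of that incident's most recent alert."""
    incidents = []
    latest = {}  # identity -> most recent incident (a list of alerts)
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = latest.get(alert.identity)
        if current is not None and alert.timestamp - current[-1].timestamp <= window:
            current.append(alert)
        else:
            current = [alert]
            incidents.append(current)
            latest[alert.identity] = current
    return incidents


# Made-up alerts mirroring the four alert types listed above.
alerts = [
    Alert("docs-bot", "geo_anomaly", datetime(2025, 1, 1, 10, 5)),
    Alert("docs-bot", "asn_change", datetime(2025, 1, 1, 10, 6)),
    Alert("docs-bot", "repo_clone", datetime(2025, 1, 1, 10, 7)),
    Alert("other-bot", "repo_clone", datetime(2025, 1, 1, 10, 8)),
    Alert("docs-bot", "integration_anomaly", datetime(2025, 1, 1, 10, 9)),
]
incidents = group_into_incidents(alerts)
for incident in incidents:
    print(incident[0].identity, [a.kind for a in incident])
```

With this sample data, the four `docs-bot` alerts collapse into one incident and the unrelated `other-bot` alert stays separate.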

How Exaforce handled it differently

From this group of signals, Exaforce generated one meaningful incident.

Identity behavior as the starting point

The identity involved was classified as a bot, not a human. Bot identities do not travel, use residential broadband, switch operating systems, or behave interactively. When those patterns appear, they represent a meaningful deviation from expected behavior. This behavioral deviation alone was sufficient to escalate the incident.
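As a rough illustration of this reasoning, the sketch below compares a single event against a bot's recorded baseline and escalates on any deviation. It is an assumption-laden example rather than the platform's actual logic: the `BotBaseline` fields, the event keys, the placeholder country codes, and the user agent strings are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotBaseline:
    countries: frozenset
    network_types: frozenset
    user_agent_prefixes: tuple  # prefixes of user agents seen historically


def deviations(baseline, event):
    """List the ways an event departs from the identity's historical baseline."""
    found = []
    if event["country"] not in baseline.countries:
        found.append("new_country")
    if event["network_type"] not in baseline.network_types:
        found.append("new_network_type")
    # str.startswith accepts a tuple of candidate prefixes.
    if not event["user_agent"].startswith(baseline.user_agent_prefixes):
        found.append("new_user_agent")
    return found


def should_escalate(identity_type, found):
    # Assumption for this sketch: any deviation by a bot is enough to escalate.
    return identity_type == "bot" and len(found) > 0


# Placeholder values only; "AA" and "BB" stand in for real country codes.
baseline = BotBaseline(
    countries=frozenset({"AA"}),
    network_types=frozenset({"cloud"}),
    user_agent_prefixes=("integration-bot/",),
)
normal = {"country": "AA", "network_type": "cloud", "user_agent": "integration-bot/1.0"}
odd = {"country": "BB", "network_type": "residential", "user_agent": "git/2.43.0"}

print(deviations(baseline, normal), should_escalate("bot", deviations(baseline, normal)))
print(deviations(baseline, odd), should_escalate("bot", deviations(baseline, odd)))
```

The same deviations from a human identity would not trip `should_escalate` here, which reflects the point above: a new country or network is unremarkable for a person but out of character for a bot.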

Automatic timeline reconstruction

The Exaforce Agentic SOC Platform automatically reconstructed the sequence of events, distinguishing normal, automated activity originating from cloud infrastructure from anomalous, manual activity coming from a residential network, and identifying the temporal overlap that proved simultaneous usage. This reconstruction required no manual queries, dashboards, or log stitching; the timeline was available immediately as part of the incident context.

AI-assisted triage with human clarity

The platform evaluated historical baselines, identity type, access patterns, and environmental context together and reached a straightforward conclusion, the same one an experienced analyst would reach: the activity should be treated as a breach until proven otherwise. That framing allowed the team to act decisively without waiting for perfect certainty.

How the Exaforce team responded

Because the incident was clearly scoped and contextualized, the response was immediate and measured. Within a short window, the P1 incident was reviewed, the managed detection and response (MDR) team engaged directly, the third-party integration was suspended, administrative authentications were revoked, and the vendor’s security team was contacted with forensic evidence. The situation was resolved within hours. The team did not need to debate whether the signals were related, because the platform had already done that work.

The outcome, and why it still matters

After a joint investigation with the vendor, we confirmed the activity was not a breach but a well-intentioned yet unsafe support action. A vendor SRE manually cloned our repository to a personal laptop to recover from a vendor-side bug, using a non-human identity to do so. While the intent was to act quickly and restore service, using a bot identity to access customer code outside the vendor’s normal production infrastructure, without prior customer notification, was inappropriate and created a valid breach indicator.

In this specific case, the repository contained public-facing platform documentation, so the direct impact was minimal. However, the same pattern applied to a repository containing internal architecture documentation, runbooks, or sensitive data could have had serious consequences. The broader lesson is how easily legitimate, time-pressured decisions become indistinguishable from compromise when controls and context are missing, and why such shortcuts should not be taken, even in difficult situations.

From disparate, ambiguous signals to decisive detection

This incident was not a breach, but the signals were real, and the risk was real. If your tools cannot make that distinction quickly and confidently, you are relying on luck and human heroics. The Exaforce platform understood, in real time, what was happening, why it mattered, and how urgent it was. That is the difference between reacting and being ready.

If you want to see how Exaforce aggregates complex signals into a single, coherent incident, book a demo with Exaforce.